Even if you are new to VMware vSphere, you may not be new to the concept of NIC teaming. If you are, have no fear: we are about to take a look at VMware NIC teaming and some of the ways it can be implemented in VMware vSphere 6.5. While we are going to talk about VMware vSphere 6.5 NIC teaming, these concepts also apply to other versions of VMware vSphere. This is the first post in a series about VMware vSphere 6.5 networking, so be sure to scroll down to the bottom of this blog for other posts in this series.

What Is VMware NIC Teaming?

NIC teaming in VMware is simply combining several NICs on a server, whether it be a Windows-based server or a VMware vSphere ESXi 6.5 host. Designing and managing a VMware vSphere 6.5 environment is not necessarily difficult, but VMware NIC teaming is one concept everyone needs to understand.

Why would we even use VMware NIC teaming? It really boils down to two reasons:

- Redundancy. My server will be able to survive a NIC failure or a link failure and continue to pass traffic.

- Load Balancing. My server will be able to pass traffic on multiple NICs. If the server is busy, the traffic will not overwhelm a single NIC, because it will be distributed in a manner we choose.

NIC Teaming in VMware – A Visual Approach

If you are a visual learner, NIC teaming in VMware is very easy to illustrate.

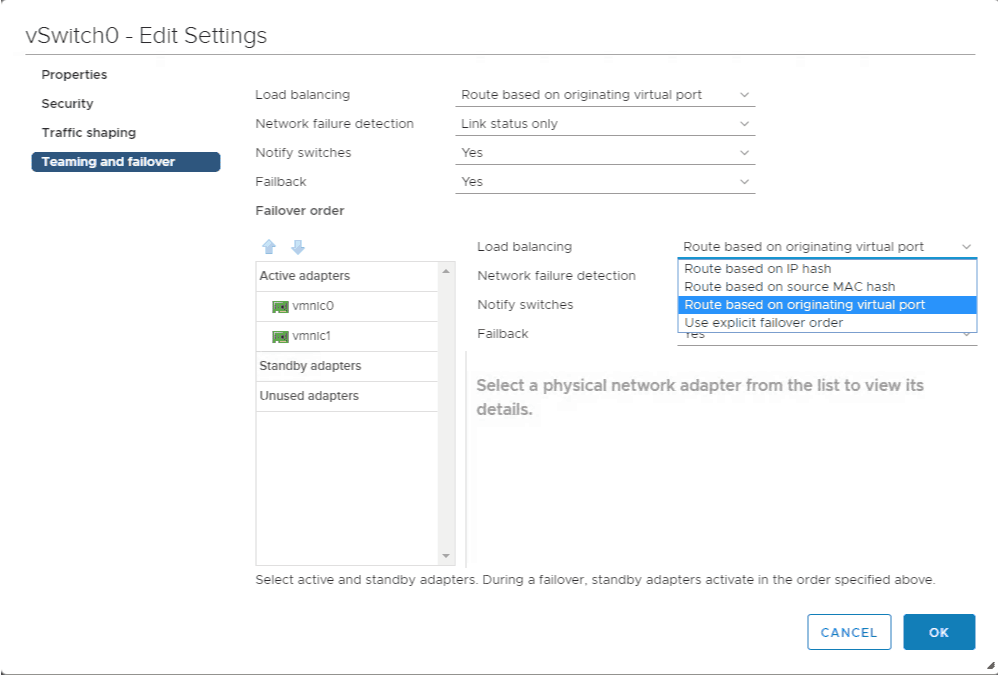

If we look at the configuration options on a standard virtual switch, or vSwitch, we will see two important groups of settings under Teaming and Failover in the VMware vSphere 6.5 networking configuration: the load balancing options and the failover order.

VMware NIC Teaming Options

As you can see, there are four options to choose from in a standard vSwitch:

- Route based on IP hash

- Route based on source MAC hash

- Route based on originating virtual port (the default VMware NIC teaming option)

- Use explicit failover order (read on to learn about this)

If we are working with a distributed virtual switch, we would also see an option called Route based on physical NIC load, which is a simple yet powerful NIC teaming option. Remember, a distributed virtual switch requires VMware vSphere Enterprise Plus licensing.
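To keep the options straight, here is a minimal Python sketch of which load balancing choices appear on which switch type, as described above. It is only a lookup table for illustration. The function name `teaming_options` and the `"standard"` / `"distributed"` labels are my own and are not part of any VMware API.

```python
from __future__ import annotations

# Options offered on a standard vSwitch, as listed in this article.
STANDARD_OPTIONS = [
    "Route based on originating virtual port",  # the default
    "Route based on IP hash",
    "Route based on source MAC hash",
    "Use explicit failover order",
]

# Additional option that only shows up on a distributed virtual switch.
DISTRIBUTED_ONLY_OPTIONS = ["Route based on physical NIC load"]


def teaming_options(switch_type: str) -> list[str]:
    """Return the teaming options for a switch type (hypothetical helper)."""
    if switch_type == "standard":
        return list(STANDARD_OPTIONS)
    if switch_type == "distributed":
        return STANDARD_OPTIONS + DISTRIBUTED_ONLY_OPTIONS
    raise ValueError(f"unknown switch type: {switch_type}")


if __name__ == "__main__":
    for kind in ("standard", "distributed"):
        print(kind, "->", teaming_options(kind))
```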

This article is part of a series on vSphere Networking. If you are working on a vSphere networking design, be sure to read some of these other helpful articles:

Making vSphere Networking Design Choices – This article reviews a foolproof vSphere networking design methodology.

Introduction to NIC Teaming in VMware vSphere 6.5 Networking – This article reviews why we use NIC teaming, and goes into more detail about what the methods are. – You are here!

The Simple Guide to NIC Teaming in VMware vSphere – This article talks about the simplest NIC teaming methods to implement: Route based on originating virtual port and Route based on physical NIC load (Load Based Teaming).

The Advanced Guide to NIC Teaming in VMware vSphere – This article takes a look at the more advanced load balancing options, Route based on IP hash and Route based on source MAC hash.

VMware NIC Teaming – Use Explicit Failover Order

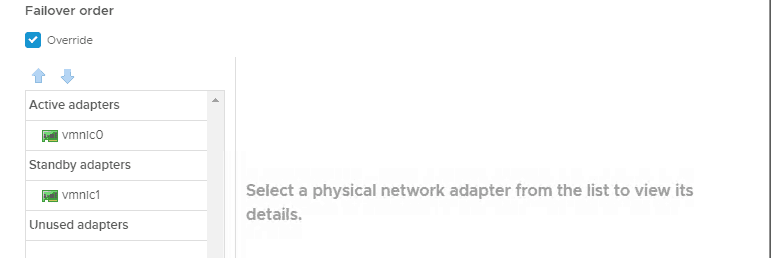

First we will take a look at one of the simplest of the Teaming and Failover options in a standard virtual switch, Use explicit failover order. With this option, no load balancing is performed. See the list of active adapters? With this “load balancing” method, VMware vSphere will simply use the first adapter in the active list. If this adapter fails, it will move to the next adapter in the active list, or to a standby adapter if no active adapter is available.

Why would this VMware NIC teaming setting be used? Let’s say I had two NICs available for management and vMotion, and I needed to use a dedicated NIC for each. I would still want to provide some level of redundancy, so for the Management port group, I would use vmnic1 as active, and vmnic2 as standby.

For the vMotion port group, I would use vmnic2 as active and vmnic1 as standby. This way, if either NIC or link failed, I would still have both Management and vMotion traffic operating on my ESXi host. This is a common pattern when it comes to VMware vSphere Networking and VMware NIC teaming.

While I have provided redundancy in this case, I have not really provided any sort of load balancing. If my NICs are fast enough, and will not become overloaded, this may be an acceptable outcome.
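If it helps to see the behavior spelled out, here is a small Python sketch of the Management and vMotion example. It is a simplified model and not how ESXi is implemented: the `FailoverPolicy` class, `select_uplink`, and `uplinks_in_use` are names I made up for illustration, and link state is just a dictionary of booleans.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class FailoverPolicy:
    """Explicit failover order for one port group (illustrative model only)."""

    active: list[str]
    standby: list[str] = field(default_factory=list)

    def select_uplink(self, link_up: dict[str, bool]) -> str | None:
        # Walk the active list in order, then the standby list, and return
        # the first adapter whose link is up. No load balancing happens here.
        for nic in self.active + self.standby:
            if link_up.get(nic, False):
                return nic
        return None  # every adapter in the team is down


# The two port groups from the example, with mirrored failover orders.
port_groups = {
    "Management": FailoverPolicy(active=["vmnic1"], standby=["vmnic2"]),
    "vMotion": FailoverPolicy(active=["vmnic2"], standby=["vmnic1"]),
}


def uplinks_in_use(link_up: dict[str, bool]) -> dict[str, str | None]:
    """Map each port group to the adapter it would use right now."""
    return {name: policy.select_uplink(link_up) for name, policy in port_groups.items()}


if __name__ == "__main__":
    # Both links healthy: each port group stays on its own dedicated NIC.
    print(uplinks_in_use({"vmnic1": True, "vmnic2": True}))
    # vmnic1 fails: Management moves to vmnic2 and shares it with vMotion.
    print(uplinks_in_use({"vmnic1": False, "vmnic2": True}))
```

Notice that after a failure both port groups land on the surviving NIC. That is the redundancy at work, and it is also why this design only holds up if one NIC can carry both kinds of traffic for a while.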

Making vSphere Network Design Choices

Many times, VMware vSphere networking design decisions may come down to constraints we are facing. For example, we already have server hardware to run ESXi on, and it has a set number of network ports without the ability to add more.

Constraints are things that remove our ability to make design choices. In an ideal world, we would be designing our ESXi networking from the ground up, but of course this is not always the case.

As you can see in the Management port group configuration above, the VMware NIC Teaming and Failover options of the switch can be overridden for each port group by checking a box. This can be useful in some cases, but may cause harm in others.
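One way to picture the override is as a port group inheriting the switch settings unless it supplies its own. The Python sketch below models that idea with plain dictionaries. The setting names, the values, and the `effective_policy` helper are all assumptions for illustration, and treating the override as a per-setting merge is my simplification of what the checkboxes do.

```python
from __future__ import annotations

# Switch-level Teaming and Failover settings (illustrative values only).
vswitch_policy = {
    "load_balancing": "originating_virtual_port",
    "active": ["vmnic1", "vmnic2"],
    "standby": [],
    "failback": True,
}


def effective_policy(vswitch: dict, override: dict | None = None) -> dict:
    """Return the settings a port group ends up with (hypothetical helper).

    Anything the port group overrides wins; everything else is inherited
    from the switch.
    """
    if override is None:
        return dict(vswitch)
    return {**vswitch, **override}


if __name__ == "__main__":
    # Management overrides load balancing and failover order, inherits failback.
    management = effective_policy(
        vswitch_policy,
        {"load_balancing": "explicit_failover", "active": ["vmnic1"], "standby": ["vmnic2"]},
    )
    print(management)
    # A port group with no override simply uses the switch settings.
    print(effective_policy(vswitch_policy))
```

The risk mentioned above shows up clearly in this model: once a port group overrides a setting, later changes to that setting on the switch no longer reach it.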

There is a lot to consider when it comes to VMware vSphere Networking, and the technical details behind it are just one small part. If you want to get in the right mindset to start the vSphere networking design process, be sure to read this article on vSphere Networking Design Choices.

We have not even scratched the surface when it comes to VMware vSphere networking. Stay tuned, because there is more to discuss with vSphere networking and VMware NIC teaming! We are also going to explore topics such as the following:

- Route based on originating virtual port and Route based on physical NIC load methods (simple)

- Route based on IP hash and MAC hash load balancing methods (more advanced)

VMware vSphere networking does not need to be complicated; it just needs to meet your requirements. By understanding the VMware NIC teaming options available, you will be able to make the best vSphere networking design choice possible.

Melissa is an Independent Technology Analyst & Content Creator, focused on IT infrastructure and information security. She is a VMware Certified Design Expert (VCDX-236) and has spent her career focused on the full IT infrastructure stack.