We have briefly talked about NIC teaming in VMware vSphere before, and why it is such a powerful tool in our vSphere arsenal. If you need a quick refresher, NIC teaming in VMware vSphere is important for both redundancy and load balancing. Now, we are going to simplify NIC teaming to its most basic form in vSphere: the default settings. I call it simple since we do not need to change anything out of the box.

Ready to take a look at how this works?

Route Based on Originating Virtual Port

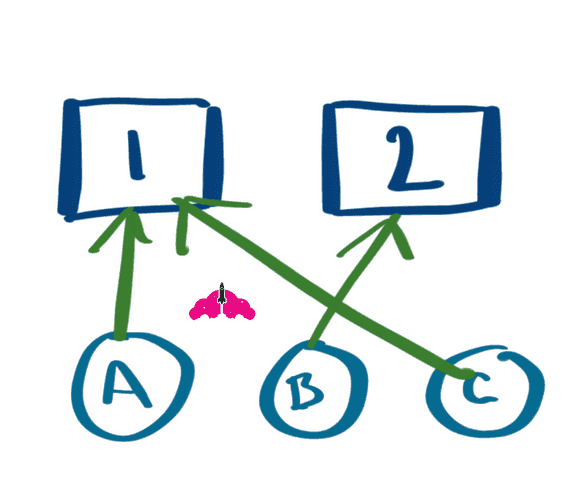

The default load balancing algorithm is called “Route based on originating virtual port”. This simply means VMware vSphere will assign each virtual machine's virtual port to the next uplink in turn. When I say uplink, I am talking about the physical network port on the ESXi host which is connected to a software-based virtual switch.

The following diagram explains how the VMware route based on originating virtual port algorithm works.

This is great, because virtual machines will be evenly distributed across our available uplink ports. This is not so great, because this simple load balancing method has no idea about the utilization of the uplink ports.
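To make the trade-off concrete, here is a minimal Python sketch of the idea. It is a simulation, not how ESXi is implemented: the `assign_uplinks` helper, the uplink names, the port IDs, and the traffic figures are all made up for illustration, and it assumes a plain round-robin assignment of ports to uplinks.

```python
from collections import Counter

def assign_uplinks(port_ids, uplinks):
    """Pin each virtual port to an uplink in round-robin order (illustrative only)."""
    return {port_id: uplinks[i % len(uplinks)] for i, port_id in enumerate(port_ids)}

uplinks = ["vmnic0", "vmnic1"]                   # hypothetical uplink names
ports = [0, 1, 2, 3]                             # hypothetical virtual port IDs
traffic_mbps = {0: 800, 1: 20, 2: 700, 3: 30}    # made-up traffic per virtual port

placement = assign_uplinks(ports, uplinks)

# Count how many ports landed on each uplink, and how much traffic they carry.
ports_per_uplink = Counter(placement.values())
load_per_uplink = Counter()
for port_id, uplink in placement.items():
    load_per_uplink[uplink] += traffic_mbps[port_id]

print(dict(ports_per_uplink))
print(dict(load_per_uplink))
```

With these made-up numbers, each uplink gets two virtual ports, yet vmnic0 carries 1500 Mbps while vmnic1 carries only 50 Mbps. The port count is balanced, but the traffic is not.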

If you decide to learn how only one NIC teaming algorithm works in VMware, route based on originating virtual port should be it. Why, you ask? Simple. First of all, it is the default NIC teaming method in VMware. Secondly, there are other NIC teaming algorithms that use it as their starting point. Read on to find out more about this.

vSphere Enterprise Plus Saves the Day With Enhanced Networking

If you are a vSphere Enterprise Plus customer, you are in luck! One of the hallmark features of vSphere Enterprise Plus is the ability to use the VMware vSphere Distributed Virtual Switch. The Distributed Virtual Switch is a special vSwitch that is configured centrally for your VMware vSphere hosts.

ESXi hosts are then connected to the VMware vSphere Distributed switch, and you can be assured they all have the same virtual networking configuration.

This is useful in environments that use features like VMware vMotion, since we know a VM will be able to access its assigned Port Group on each and every vSphere host connected to the Distributed Virtual Switch.

Route Based on Physical NIC Load or Load Based Teaming (VMware LBT)

As we mentioned previously, vSphere Enterprise Plus has an additional load balancing algorithm called “Route based on physical NIC load”, commonly referred to as LBT or Load Based Teaming. Load Based Teaming is available when using a distributed virtual switch. It is also quite simple because it starts out working the same way as the default load balancing algorithm.

Remember what we said the big problem was with that? The default algorithm has no idea how busy each uplink is. Load Based Teaming solves this by becoming aware of the load on each uplink port. If an uplink port reaches 75% utilization over a 30-second period, the busy VM is moved to another uplink.
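The rule above can be sketched as a small Python simulation. This is only an illustration under stated assumptions: the `rebalance` function, the VM names, and the traffic figures are hypothetical, the loads stand in for averages over one 30-second window, and the choices of which VM to move (the busiest) and where to move it (the least-loaded other uplink) are my simplifications, not documented ESXi behavior.

```python
THRESHOLD = 0.75  # utilization trigger described in the article

def rebalance(placement, vm_mbps, capacity_mbps):
    """Run one evaluation pass and return a new VM-to-uplink placement."""
    placement = dict(placement)  # work on a copy, leave the input untouched

    def load(uplink):
        return sum(vm_mbps[vm] for vm, u in placement.items() if u == uplink)

    for uplink in sorted(capacity_mbps):
        if load(uplink) / capacity_mbps[uplink] < THRESHOLD:
            continue  # uplink is below the trigger, leave it alone
        vms = [vm for vm, u in placement.items() if u == uplink]
        others = [u for u in capacity_mbps if u != uplink]
        if not vms or not others:
            continue  # nothing to move, or nowhere to move it
        busiest = max(vms, key=lambda vm: vm_mbps[vm])  # assumption: move the busiest VM
        placement[busiest] = min(others, key=load)      # assumption: least-loaded target
    return placement

capacity = {"vmnic0": 1000, "vmnic1": 1000}        # hypothetical 1 Gbps uplinks
vm_mbps = {"web": 500, "db": 400, "app": 100}      # made-up average load per VM
before = {"web": "vmnic0", "db": "vmnic0", "app": "vmnic1"}

after = rebalance(before, vm_mbps, capacity)
print(after)
```

In this example vmnic0 starts at 90% utilization, so the sketch moves the `web` VM to vmnic1, leaving vmnic0 at 40% and vmnic1 at 60%.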

VMware LBT only requires minimal configuration on your virtual switch (simply selecting “Route based on physical NIC load”). It is quite simple since it provides load balancing without needing to configure any upstream components.

Once LBT is selected, virtual machines will be evenly balanced across your uplink ports, and VMs will be moved to another uplink port if they get busy.

Load Based Teaming and Route Based on Originating Virtual Port are the simplest methods of NIC teaming in VMware, since neither requires any additional configuration outside of the virtual switch. With Load Based Teaming, you can simply turn it on, and your traffic will be balanced across your NICs based on how busy each uplink actually is.

Use Explicit Failover Order

Explicit failover order is another option available when it comes to configuring load balancing for your VMware NIC team, and it is very self-explanatory. You manually tell ESXi which NICs to use regularly, and which NICs to use in the event of a failure.

This is done by creating a list of Active NICs and Standby NICs when you configure your VMware NIC team. ESXi will just use the first available NIC in the Active list, and only move down the list when that NIC fails. There is no intelligence behind this method, and it does not provide any load balancing, only resiliency.
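The selection logic is simple enough to sketch in a few lines of Python. This is an illustration rather than ESXi code: the `select_uplink` function, the NIC names, and the `link_up` status map are hypothetical.

```python
def select_uplink(active, standby, link_up):
    """Return the first healthy NIC, checking the Active list before the Standby list."""
    for nic in list(active) + list(standby):
        if link_up.get(nic, False):
            return nic
    return None  # no healthy NIC is left in either list

active = ["vmnic0", "vmnic1"]   # hypothetical Active NICs, in order
standby = ["vmnic2"]            # hypothetical Standby NIC

all_up = {"vmnic0": True, "vmnic1": True, "vmnic2": True}
first_down = {"vmnic0": False, "vmnic1": True, "vmnic2": True}
actives_down = {"vmnic0": False, "vmnic1": False, "vmnic2": True}

print(select_uplink(active, standby, all_up))        # vmnic0
print(select_uplink(active, standby, first_down))    # vmnic1
print(select_uplink(active, standby, actives_down))  # vmnic2
```

Notice that all traffic lands on a single NIC at any given time, which is why this method gives you resiliency but no load balancing.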

While it is a very simple method of NIC teaming, it may not fit all situations, or meet all requirements.

This article is part of a series on vSphere Networking. If you are working on a vSphere networking design, be sure to read some of these other helpful articles:

Making vSphere Networking Design Choices – This article reviews a vSphere networking design methodology.

Introduction to NIC Teaming in VMware vSphere 6.5 Networking – This article reviews why we use NIC teaming, and goes into more detail about what the methods are.

The Simple Guide to NIC Teaming in VMware vSphere – This article talks about the simplest NIC teaming methods to implement: Route Based on Originating Virtual Port and Route Based on Physical NIC Load (Load Based Teaming) – You are here!

The Advanced VMware vSphere NIC Teaming Guide – This article discusses more advanced methods of vSphere load balancing, such as Route Based on IP Hash and Route Based on Source MAC Hash.

I hope this was helpful for continuing to understand the importance of NIC teaming in VMware. Remember, VMware NIC teaming does not need to be complicated to achieve load balancing, but the way you achieve load balancing in VMware is impacted by what level of vSphere licensing you have. Route based on originating virtual port and route based on physical NIC load (also called Load Based Teaming or VMware LBT) are both effective methods of NIC teaming.

Melissa is an Independent Technology Analyst & Content Creator, focused on IT infrastructure and information security. She is a VMware Certified Design Expert (VCDX-236) and has spent her career focused on the full IT infrastructure stack.