One of the projects discussed at the Kilo OpenStack design summit in Paris earlier this month is Ironic. Ironic is a program that seeks to deploy instances directly onto hardware, instead of as virtual machines. When we think of OpenStack, we tend to think of abstracting all the things, and the evolution of the hypervisor into a cloud computing environment. Earlier this year, Rackspace began offering OnMetal cloud services, or the ability to deploy OpenStack compute instances directly onto hardware. Let’s take a deeper look at the Irony of OpenStack Ironic.

A Traffic Jam When You’re Already Late

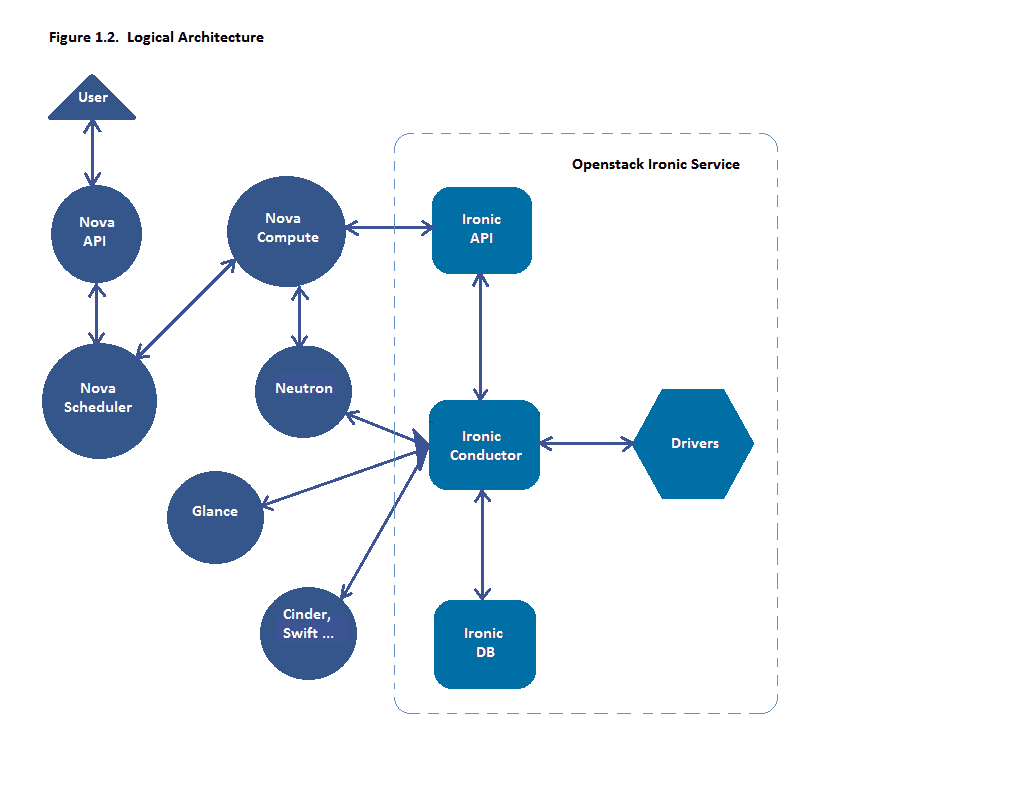

Ironic, like other OpenStack programs, consists of multiple services, including a dedicated API and a database. Ironic has another component that most projects don’t have, and that’s the Ironic conductor. The Ironic conductor is what does the heavy lifting. It is responsible for powering hardware on and off, and for the actual provisioning and decommissioning of instances on that hardware. Ironic also has some other dependencies, such as PXE, DHCP, NBP, TFTP, and IPMI, which reminds me a little bit of VMware’s Auto Deploy. Instead of deploying a hypervisor, we’re deploying an instance. An added layer of complexity does come with the use of physical hardware: we need to make sure that we have the proper drivers for each type of hardware we plan on deploying bare metal to, something we aren’t used to worrying about as much in the virtualized world.
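To make the conductor's job a little more concrete, here is a toy model in Python. This is not Ironic's code or its API. The `Node` and `ToyConductor` names, the fields, and the driver strings are all made up for illustration. It only captures the responsibilities described above: check that we have a driver for the hardware, power the node on, put an image on it, and later wipe it and power it off.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Power(Enum):
    OFF = "off"
    ON = "on"


@dataclass
class Node:
    """Hypothetical bare metal node record; field names are illustrative."""
    name: str
    driver: str                  # e.g. "ipmi"; which driver manages this box
    power: Power = Power.OFF
    image: Optional[str] = None  # None means no instance is deployed


class ToyConductor:
    """Toy stand-in for the conductor role, not the real Ironic conductor."""

    def __init__(self, supported_drivers):
        self.supported_drivers = set(supported_drivers)

    def provision(self, node, image):
        # Physical hardware needs a matching driver before anything else.
        if node.driver not in self.supported_drivers:
            raise ValueError(f"no driver for {node.name}: {node.driver}")
        if node.image is not None:
            raise RuntimeError(f"{node.name} already hosts an instance")
        node.power = Power.ON   # in reality, a power command sent over IPMI
        node.image = image      # in reality, a network boot and image write
        return node

    def decommission(self, node):
        # Tear the instance down and leave the node ready for reuse.
        node.image = None
        node.power = Power.OFF
        return node


if __name__ == "__main__":
    conductor = ToyConductor({"ipmi"})
    node = Node(name="rack1-u01", driver="ipmi")
    conductor.provision(node, "monthly-report-app")
    print(node)
    conductor.decommission(node)
    print(node)
```

The real conductor does far more than flip two fields, but the shape is the same: the driver check comes first, and everything else hangs off of it.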

The use of a hypervisor in conjunction with OpenStack definitely has its advantages. Hypervisor features like high availability and live migration of instances make an environment more resilient and flexible for sure, but hypervisors aren’t without cost. There are licensing and support fees for this software, but what if I don’t really need the features it provides? What if I’m deploying an application with built-in resiliency at the compute layer? One of the great things about OpenStack is the abstraction of data from the instance. Our instances are merely a shell, and other services are storing our data. For example, we have Cinder volumes attached to our instances and objects stored in Swift, and neither is impacted by an instance failure. Perhaps I have a couple of different applications that I don’t necessarily need to use all of the time, but I need them once or twice a month each. I could buy a rack of hardware, 1U servers to meet the specs, and use something like OpenStack Ironic to deploy instances directly onto bare metal when I need them, then blow it all away and deploy another instance when I don’t need it any more. In the event of a failure, who really cares about my instance? As long as my application keeps running, I can deploy a new instance and reconnect the Cinder volumes and Swift storage I need.

Behind the Bare Metal

The case could be made for deploying large numbers of cheap commodity servers in an environment, instead of things we may consider hypervisor-class servers. For example, let’s say we have a bunch of 1U dual socket servers with 32GB of RAM and some 10 GigE because it was on sale that week. Due to the low amount of memory, it may not even make sense to run a hypervisor on them, since we’d just have a horrible consolidation ratio anyway, but we could use something like OpenStack Ironic to run instances directly on them. There may also be a use case when we have an instance that is large and brushing up against the specifications we use for our hypervisor-class servers. We may also have a customer that requires their own dedicated hardware infrastructure due to regulatory or other requirements. Ironic would allow them to still manage their physical assets just like they would their virtual ones in OpenStack, removing the need for siloed infrastructures to support physical hardware.
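A quick back-of-the-envelope calculation shows what a horrible consolidation ratio looks like on a box like that. The 32GB host comes from the example above. The 4GB of hypervisor overhead and the instance sizes are purely my assumptions for illustration, and the sketch only counts memory, with no overcommit.

```python
def vms_per_host(host_ram_gb, hypervisor_overhead_gb, vm_ram_gb):
    """Rough count of VMs that fit in memory, with no overcommit.

    All inputs are whole gigabytes. The overhead figure is an assumption;
    real overhead varies by hypervisor and configuration.
    """
    if vm_ram_gb <= 0:
        raise ValueError("vm_ram_gb must be positive")
    usable_gb = max(host_ram_gb - hypervisor_overhead_gb, 0)
    return usable_gb // vm_ram_gb


if __name__ == "__main__":
    # 32GB host, assumed 4GB overhead, assumed instance sizes.
    for vm_size in (8, 16, 24):
        count = vms_per_host(32, 4, vm_size)
        print(f"{vm_size}GB instances per host: {count}")
```

With those assumed numbers, the host fits three 8GB instances, and only one instance of 16GB or larger. At a ratio of one to one, the hypervisor is buying very little consolidation, which is where running the instance directly on the hardware starts to look reasonable.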

I believe in virtualization first, but I can see how Ironic makes sense. As we move further away from the notion of a server being the most important factor (whether it be physical or virtual), and more towards the notion that we’re concerned with the data inside the instance instead of the instance itself, Ironic may make sense for some use cases. While we won’t be running a traditional hypervisor on our bare metal, we’re still going to be managing these instances as we would their virtual counterparts, and leveraging all of the orchestration tools, such as Heat, that we would with our virtual instances. Perhaps we could even use Heat to trigger Ironic to deploy OpenStack service endpoints onto bare metal. That really would be, well, Ironic.

Song of the Day – Ella Henderson – Ghost

Melissa is an Independent Technology Analyst & Content Creator, focused on IT infrastructure and information security. She is a VMware Certified Design Expert (VCDX-236) and has spent her career focused on the full IT infrastructure stack.
