If you haven’t heard the letters AI recently, you probably aren’t even reading this. AI is everywhere we look, even beyond the technology industry. Regular people are using ChatGPT in their daily lives, and there are AI commercials on TV. In the technology world, every vendor boasts some sort of AI feature in their product because, well, they simply have to in order to stay relevant.

One thing that is often missed is that AI has to live someplace, it has to run someplace, and that place is a data center. Even if we are talking about AI powered by a hyperscale provider like Microsoft Azure, Amazon Web Services, or Google Cloud, the cloud is just someone else’s data center.

Pair that with the trend of people moving back to their own data centers, and it is time to have a very interesting discussion.

Designing AI Solutions with Cisco UCS X-Series

While I’ve certainly used multiple vendors when it comes to designing AI ready private cloud solutions, as we all know, Cisco UCS is one of my favorite platforms to design with, and there really are some unique things to think about when using the Cisco UCS X-Series.

The Cisco UCS X-Series is Cisco’s “blade” server platform. I use the term blade loosely, because in recent years there has been a shift toward talking about the X-Series’ modular architecture rather than its form factor.

In any event, there are some important things to consider when designing an AI solution powered by UCS X-Series. Why? It all comes down to the difference between an AI-ready infrastructure solution and a more traditional one: GPUs.

Cisco UCS X-Series and GPUs

GPUs are essential to any AI-based architecture. The number one complaint about blade servers is usually their form factor: you simply can’t fit enough expansion hardware in them, and that is a huge consideration when we’re talking about AI.

Let’s take the UCS X210c M8 compute node (this one is powered by Intel while the Cisco UCS X215c is powered by AMD).

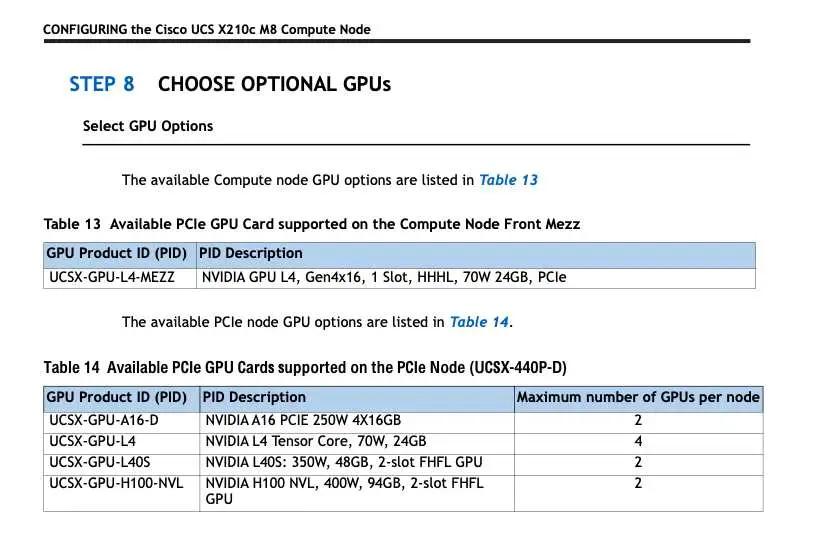

The front mezzanine slot can support two HHHL (half-height, half-length) GPUs. If we go to the UCS X210c spec sheet, we can see we’re pretty limited. But wait, what’s this PCIe Node? There are quite a few more options there, especially for FHFL (full-height, full-length) GPUs.

(Image from the Cisco UCSX210c Spec Sheet)

The Cisco UCS X440p PCIe Node

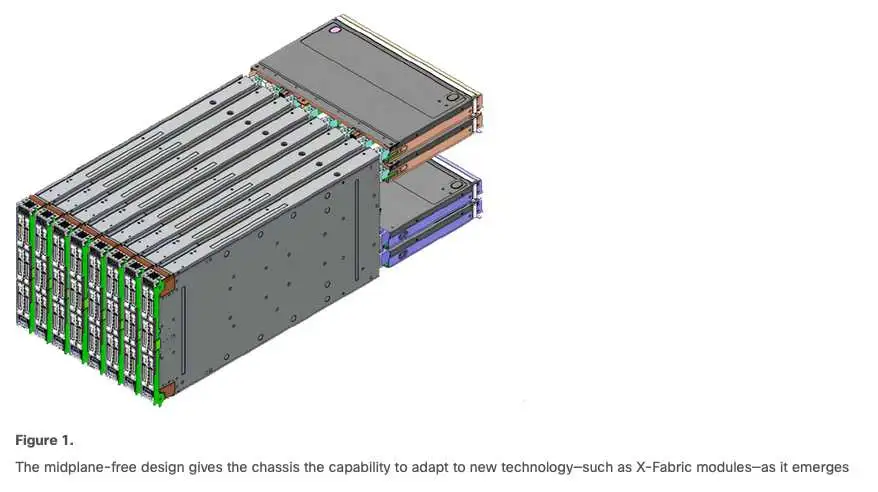

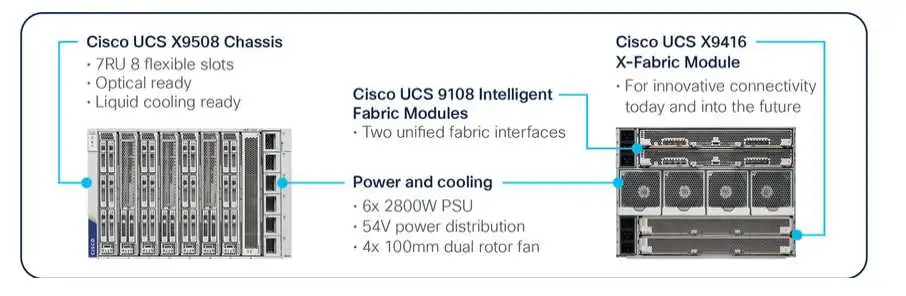

There were a few architectural changes from the Cisco UCS 5108 to the X9508 chassis, and the use of the UCS X440p is what makes these changes obvious.

The X9508 has four connectivity slots on the back: two for the UCS 9108 Intelligent Fabric Modules (IFMs), which connect to the Fabric Interconnects, and two for the UCS X9416 X-Fabric Modules.

(Image from X-Series Modular System overview)

If I recall correctly, when the UCS X-Series launched in 2021, there was a lot of discussion of it being a future-proof modular platform. While two rear slots were for the IFMs, we talked a lot about the other two slots being for “future expansion.”

The X-Fabric modules act as the backbone for these PCIe expansion blades. They allow the X-Series servers to leverage additional GPUs in a manner that is scalable and high performing. Each X9508 can have a maximum of four X440p PCIe nodes. With up to two full-size GPUs per node, that translates to a maximum of eight full-size GPUs in the 7U chassis.
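To make that chassis math concrete, here is a minimal Python sketch. It only encodes the figures quoted in this post (four X440p nodes per X9508, two full-size GPUs per node); the constant and function names are my own illustrative choices, not anything from Cisco tooling.

```python
# Capacity sketch based on the figures quoted in this post.
# All names here are illustrative, not part of any Cisco API.
MAX_X440P_NODES_PER_CHASSIS = 4  # up to four X440p PCIe nodes per X9508
FULL_SIZE_GPUS_PER_X440P = 2     # two full-size (FHFL) GPUs per X440p


def max_gpus_per_chassis(pcie_nodes: int, gpus_per_node: int) -> int:
    """Return the GPU count for a chassis with the given X440p population."""
    if not 0 <= pcie_nodes <= MAX_X440P_NODES_PER_CHASSIS:
        raise ValueError("an X9508 holds between 0 and 4 X440p PCIe nodes")
    return pcie_nodes * gpus_per_node


# A fully populated chassis: 4 nodes * 2 full-size GPUs = 8
print(max_gpus_per_chassis(MAX_X440P_NODES_PER_CHASSIS, FULL_SIZE_GPUS_PER_X440P))
```

The same function works for partially populated chassis, which is handy when you are sizing a design that will grow over time.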

Well, with the rise of AI, the future is here now, and the Cisco UCS X-Series is a great platform to bring that future to your data center by leveraging the X440p PCIe nodes and your GPU of choice.

But which GPUs do I use?

We’ve talked about the different GPU options for the Cisco UCS X210c specifically, so let’s take a deeper dive into which GPU to use for what purpose. The GPUs you end up selecting, and how you implement them, tie back to the requirements of the AI system you are designing.

Here’s what we have available for the X440p:

(From the Cisco UCS-X440P spec sheet)

A lot of thought goes into which cards will go into your AI infrastructure, but for today’s purposes I want to oversimplify things.

When we talk about building an AI system, we are building it to do one of two things, either training or inference.

Training vs. Inference

If we take the example of ChatGPT, training and inference are two different phases in its lifecycle.

Training is building the AI itself. This is where it ingests huge datasets, and how the model “learns”. This is very heavy in terms of the GPUs required and doesn’t happen overnight, as we can see by how often new AI models are released. Think massive amounts of GPU and bandwidth to teach the model.

Once a model is trained, well, it’s trained and shipped. The model won’t be trained again, but you can be assured that that massive amount of GPU and computing power is then being used to train the next model.

Inference is simply using ChatGPT. The trained model is loaded into the system, and the GPUs are used to generate the answer from the trained model. In this case, the model is already trained and loaded into GPU memory so the model can be used. Inference happens as long as the model is being used.

If we look at the chart, a quick rule of thumb is that the GPU models you see with a maximum of 2 per node are for training, and the ones you see with a maximum of 4 per node are for inference.
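If it helps to see that rule of thumb written down, here is a tiny Python sketch. It is only the heuristic from this post, not a Cisco or NVIDIA sizing rule, and the per-node maxima in the example dictionary are illustrative assumptions that should be confirmed against the X440p spec sheet.

```python
def likely_workload(max_gpus_per_node: int) -> str:
    """Apply this post's rule of thumb to a GPU's per-node maximum."""
    rule_of_thumb = {2: "training", 4: "inference"}
    # Anything else falls outside the heuristic, so defer to the spec sheet.
    return rule_of_thumb.get(max_gpus_per_node, "check the spec sheet")


# Assumed per-node maxima, for illustration only.
example_maxima = {"NVIDIA A100 80GB": 2, "NVIDIA L4": 4}

for gpu, per_node in example_maxima.items():
    print(f"{gpu}: {likely_workload(per_node)}")
```

Treat the result as a starting point for the conversation, since plenty of real designs blur the line between the two workloads.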

In this case, let’s take a closer look at the NVIDIA A100 vs. NVIDIA L4:

| Feature | NVIDIA A100 80GB (Training) | NVIDIA L4 (Inference) |
| --- | --- | --- |
| UCS Part # | UCSX-GPU-A100-80 / A100-80-D | UCSX-GPU-L4 / L4-D |
| Primary Use Case | Massive model training (LLMs, GPT-4, BERT, DLRM) | Scalable, low-power inference (LLMs, CV, recommendation) |
| GPU Architecture | Ampere | Ada Lovelace |
| Memory | 80GB HBM2e | 24GB GDDR6 |
| FP32 Performance | ~19.5 TFLOPS | ~30 TFLOPS |
| FP16/BF16 Performance | ~312 TFLOPS (~624 TFLOPS with sparsity) | ~242 TFLOPS with sparsity |
| INT8 Performance (Inference) | ~624 TOPS (~1,248 TOPS with sparsity) | ~485 TOPS with sparsity |
| Tensor Core Support | Yes (3rd Gen) | Yes (4th Gen) |
| Power Consumption | 300W – 400W (configurable) | 72W |
| Cooling | Passive & active options | Low-power, passive |
| Multi-Instance GPU (MIG) | Yes (up to 7 instances) | No |
| Interconnect Support | NVLink, PCIe Gen4 | PCIe Gen4 |
| Typical UCS Blade Use | X-Series or C-Series for AI clusters | Dense inference on UCS X210c or C220/C240 |
| Virtualization/VDI Ready | Yes (vGPU, MIG) | Yes (vGPU), optimized for multi-tenant inference |
| Deployment Cost | High (>$10,000 per GPU) | Low-to-mid ($1,500–$3,000 per GPU) |

As you can see, there is a huge difference in these GPUs, and the one you will choose is based on what you are trying to accomplish.
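One practical way to use the memory numbers is a quick back-of-the-envelope check of whether a model’s weights fit on a single card. The Python sketch below is a deliberately rough estimate under stated assumptions: it counts only the weights (parameters times bytes per parameter), ignores the KV cache, activations, and framework overhead, and uses hypothetical model sizes. The helper names are my own.

```python
# GPU memory per card in GB, taken from the comparison in this post.
GPU_MEMORY_GB = {"A100 80GB": 80, "L4": 24}

# Storage cost of one parameter at common precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weights_gb(params_billions: float, precision: str) -> float:
    """Estimate weight size in GB: 1e9 params * bytes / 1e9 bytes per GB."""
    return params_billions * BYTES_PER_PARAM[precision]


def fits_on_one_gpu(params_billions: float, precision: str, gpu: str) -> bool:
    """Weights-only check; real deployments need extra headroom."""
    return weights_gb(params_billions, precision) <= GPU_MEMORY_GB[gpu]


# Hypothetical model sizes, for illustration only.
print(weights_gb(7, "fp16"), fits_on_one_gpu(7, "fp16", "L4"))
print(weights_gb(70, "fp16"), fits_on_one_gpu(70, "fp16", "A100 80GB"))
```

A 7-billion-parameter model at FP16 needs roughly 14 GB for weights alone, which is within an L4’s 24 GB, while a 70-billion-parameter model at FP16 needs roughly 140 GB and has to be split across multiple GPUs or quantized.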

Cisco Design Guides for AI-Ready Infrastructure

It isn’t a secret that I have spent a great deal of time in my career creating, designing, and building Cisco based data center solutions.

The Cisco Validated Design Zone has everything you need to keep learning what an AI-ready infrastructure really looks like, and to get started with a Cisco UCS-based AI infrastructure solution.

Keep in mind some solutions do use the UCS C-series rack mount servers in some form, which we will be diving deeper into another time.

We’re just scratching the surface of what Cisco UCS and NVIDIA GPUs can do together. In this post, we focused on the X-Series and GPU selection—but stay tuned, because next we’ll be diving into how UCS C-Series servers, NVIDIA AI Enterprise software, and Cisco’s broader AI ecosystem tie everything together into a cohesive, production-ready AI infrastructure. The future of AI is modular, flexible, and already here.

Melissa is an Independent Technology Analyst & Content Creator, focused on IT infrastructure and information security. She is a VMware Certified Design Expert (VCDX-236) and has spent her career focused on the full IT infrastructure stack.