Anyone who has worked with VMware vSphere can tell you that it is pure magic. Deploy a brand new server with a single click while you do something else. Send virtual machines around the world, to the cloud, and back again with the click of a button. Virtually anything you want or need to do in the world of computing is supported by this platform, and easy to operate and manage.

However, this was not always the case. VMware vSphere has come a long way since VMware was founded in 1998. Let’s take a walk through virtual history together, and even dive into the treasure trove of my vSphere archives.

Without further ado, here are 13 things only early VMware vSphere Administrators will remember.

1. Life Before vSphere Clusters

Right out of the gate, this is one of the major things we early VMware vSphere administrators remember. Back in my day, when I got started, there was no such thing as a VMware vSphere cluster. This means some of the hallmark VMware vSphere features we rely on today simply did not exist.

Can you imagine a life without vMotion, DRS, or HA? Trust me, it is not pretty. Even when these features first came out, they were not nearly as robust as they are today.

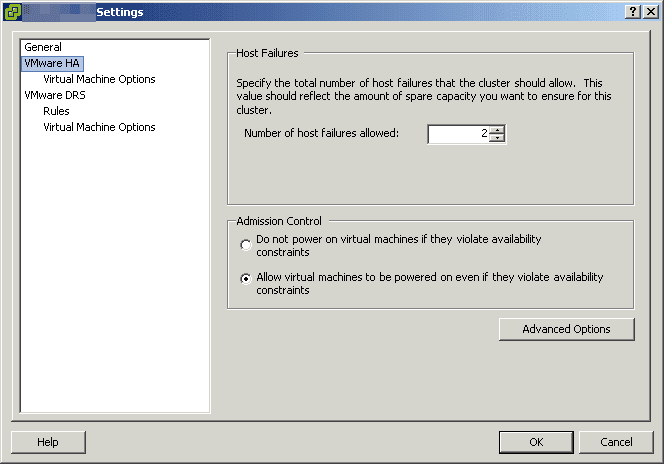

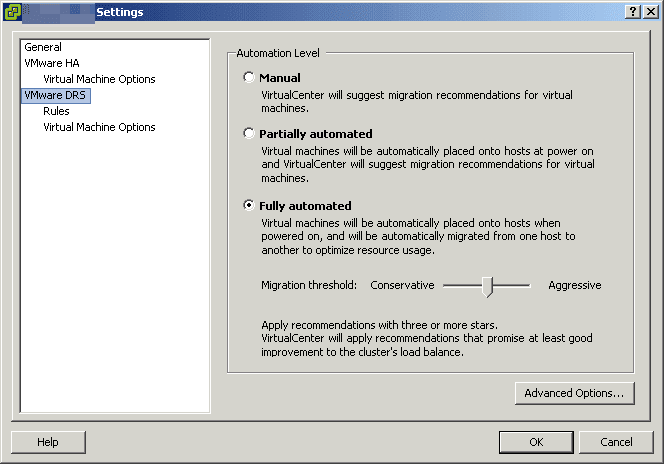

Ever heard the phrase set it and forget it? This was pretty much the case for VMware HA in the early days:

Similarly, DRS also did not have many options:

VMware added these features with the release of VirtualCenter 2.0 and ESX 3.0 on June 13, 2006. Be sure to read the release notes for a blast from the past!

2. The ESX Service Console

One of my first blog posts ever was on the VMware ESX service console. Before ESXi, VMware’s hypervisor was called ESX, which included something called the service console.

If you have used the command line to manage VMware vSphere in recent years, some of the functionality will look familiar. For example, many commands start with the vicfg prefix. Back in the day, the equivalent commands started with the esxcfg prefix and were issued from the service console.

However, the service console was much more than just a command line interface. Think of it as a small virtual machine that sat on your ESX host. You could do all sorts of things there, like run scripts and install third-party utilities for tasks such as backup. However, this also had the potential to wreak havoc in your VMware vSphere environment if you did not know what you were doing.

Eventually, VMware introduced ESXi and retired ESX and its service console. The final release with a service console was vSphere 4.1, which was offered in both ESX and ESXi versions. During the switchover, things got a little funky. For example, to access a shell on an ESXi host, you used to have to type the word “unsupported”. Eventually, VMware listened to their customer base and introduced the command line management tools we know today, like the ESXi shell we all find so useful.

3. VirtualCenter

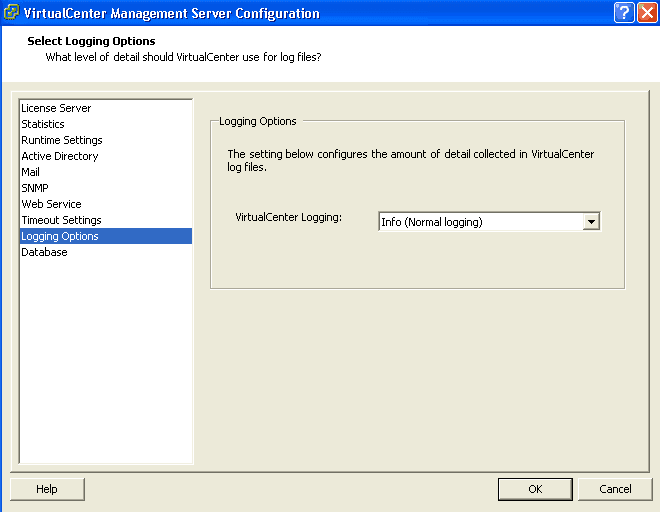

We have already touched on this a little bit when we talked about the introduction of the VMware cluster. vCenter Server as we know it today used to be called VirtualCenter. While that is not too confusing, the version numbers of VirtualCenter and ESX never matched: VirtualCenter was always a version behind, with VirtualCenter 2.0 paired with ESX 3.0 and VirtualCenter 2.5 paired with ESX 3.5.

This, of course, caused some confusion. When VMware rebranded to vSphere, they also renamed VirtualCenter to vCenter Server and made sure its version numbers matched the host releases going forward.

This happened with the release of VMware vSphere 4.0. Previously, the product was called VMware Infrastructure 3 or VI3. Sometimes, I still catch myself talking about VirtualCenter.

4. The CPU and Memory Flip Flop

Back in the day, VMware vSphere hosts were super expensive, and it took a lot of careful thought to determine your requirements. Many VMware vSphere administrators noticed a pattern: their clusters were either CPU- or memory-constrained. The prices of these resources were constantly fluctuating, and it was hard to figure out the right combination.

This led to quite a bit of administrative overhead, as you tried to balance your virtual machines across the clusters you had so you were not wasting any of a particular resource, which was especially hard if you did not have a vSphere cluster! This brings me to my next memory.

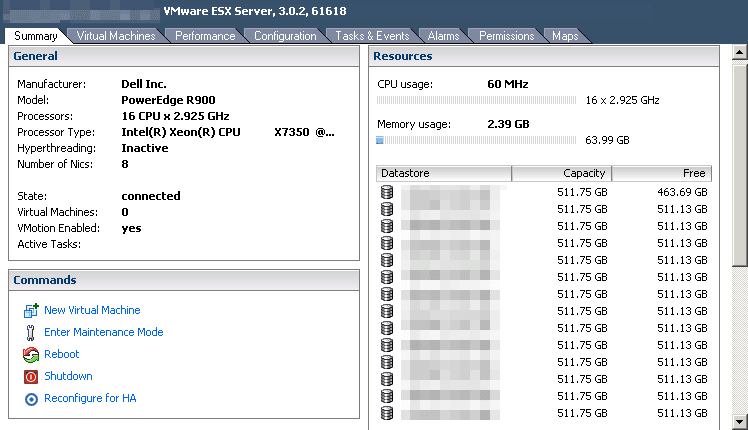

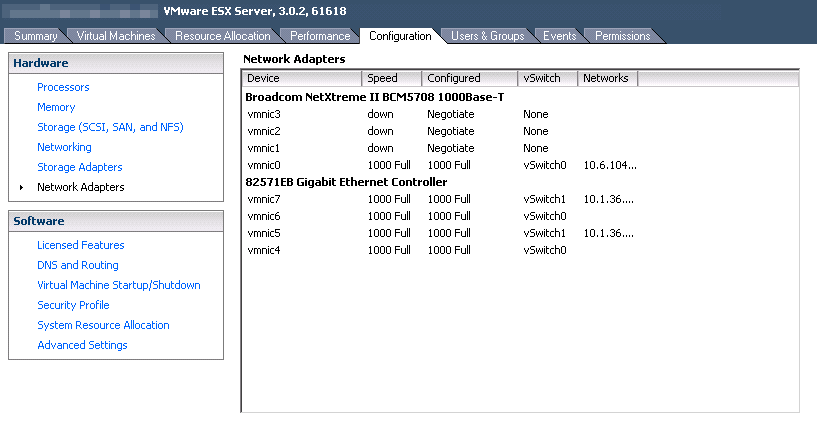

Check out these impressive stats on a ESX 3.0.2 host, believe it or not, this thing was a beast back in the day, and I was super excited to deploy it:

5. There Was No EVC Mode

Speaking of CPU, back in the early days there was no EVC mode. EVC, or Enhanced vMotion Compatibility, is what allows you to move virtual machines non-disruptively between different processor families from the same manufacturer.

This will not allow you to move virtual machines between Intel and AMD processors non-disruptively, but it will allow you to move between different generations of Intel processors OR different generations of AMD processors.

EVC works by bringing processors down to the lowest common denominator you select. It was introduced in VirtualCenter 2.5 Update 2 / ESX 3.5 Update 2. This is also one of the first things to enable on your vSphere cluster if you plan on using it. In vSphere 6.7, this functionality was greatly enhanced with the introduction of per-VM EVC mode.
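To make the "lowest common denominator" idea concrete, here is a simplified Python model. It is only a sketch: real EVC modes are predefined by VMware and enforced by masking CPUID feature bits, while the baseline names (gen1 to gen3) and feature flags below are made up for illustration. The point it shows is that a cluster can only run a baseline every host's CPU fully supports, and VMs then see only that baseline's features, so they can move between older and newer hosts.

```python
# Simplified, hypothetical model of EVC baselines. Real EVC modes are
# predefined by VMware and applied by masking CPUID feature bits; the
# baseline names and feature flags here are illustrative only.
from typing import Dict, FrozenSet, List, Optional, Tuple

# Baselines ordered oldest -> newest; each newer baseline adds features
# on top of the previous one.
BASELINES: List[Tuple[str, FrozenSet[str]]] = [
    ("gen1", frozenset({"sse2", "sse3"})),
    ("gen2", frozenset({"sse2", "sse3", "ssse3"})),
    ("gen3", frozenset({"sse2", "sse3", "ssse3", "sse4.1"})),
]


def host_supports(host_features: FrozenSet[str], baseline: str) -> bool:
    """True if the host's CPU provides every feature in the baseline."""
    return dict(BASELINES)[baseline] <= host_features


def highest_common_baseline(hosts: Dict[str, FrozenSet[str]]) -> Optional[str]:
    """Newest baseline that every host in the cluster supports, or None."""
    best = None
    for name, _ in BASELINES:
        if all(host_supports(feats, name) for feats in hosts.values()):
            best = name
    return best


def features_seen_by_vm(baseline: str) -> FrozenSet[str]:
    """With EVC enabled, a VM sees only the baseline's features, whatever host it runs on."""
    return dict(BASELINES)[baseline]


if __name__ == "__main__":
    cluster = {
        "esx01": frozenset({"sse2", "sse3", "ssse3", "sse4.1"}),  # newer CPU generation
        "esx02": frozenset({"sse2", "sse3", "ssse3"}),            # older CPU generation
    }
    mode = highest_common_baseline(cluster)
    print(mode)                               # gen2
    print(sorted(features_seen_by_vm(mode)))  # ['sse2', 'sse3', 'ssse3']
```

In the real product you choose the EVC mode yourself; the newest common baseline computed here is simply the highest mode you could pick for that mix of hosts. As with real EVC, the model says nothing about moving between Intel and AMD.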

6. Storage vMotion Command Line

Another great feature of VirtualCenter 2.5 Update 2 / ESX 3.5 Update 2 was Storage vMotion. Upon initial release, it only worked with FC and iSCSI datastores, but no one cared, since it was so revolutionary. It was a total game changer and made VMware ESX storage much easier to manage.

While we take this for granted today and are used to starting a Storage vMotion in vCenter with two clicks, at initial release Storage vMotion was only available through our friend the vSphere Command Line Interface.

There were some wacky vCenter plugins that filled the gap until vSphere 4 came out, but the best thing to do was to suck it up and deal. I usually wrote out the command in Notepad first to make sure I got it right, because the last thing you wanted was to send the virtual machine to the wrong place!

7. vSphere Update Manager Used to Patch Windows (and Linux)

vSphere Update Manager was released way back in the days of VI3, with the VirtualCenter 2.5 / ESX 3.5 release. Besides patching ESX, it also had the ability to patch Windows and some Linux systems.

While this was awesome, VMware eventually deprecated this functionality, pretty much just as everyone was adopting it.

8. Death By Backup

Back in the dark ages, we all struggled together when it came to administration and operations of a VMware ESX host, and the virtual machines residing on it. Almost every organization started out by treating virtual machines as they would a physical server, and quickly found out that it did not work well.

The place this was the most obvious was the world of backup. Our ESX hosts would come to a grinding halt when backup time came, and virtual machines would throw errors and miss backups pretty much all of the time.

At that time, most servers, virtual or physical, were backed up by an agent installed on them. This quickly became a horror scene as more and more virtual machines came online. The backup jobs were resource-intensive and crushed the ESX environment. It was not a good situation.

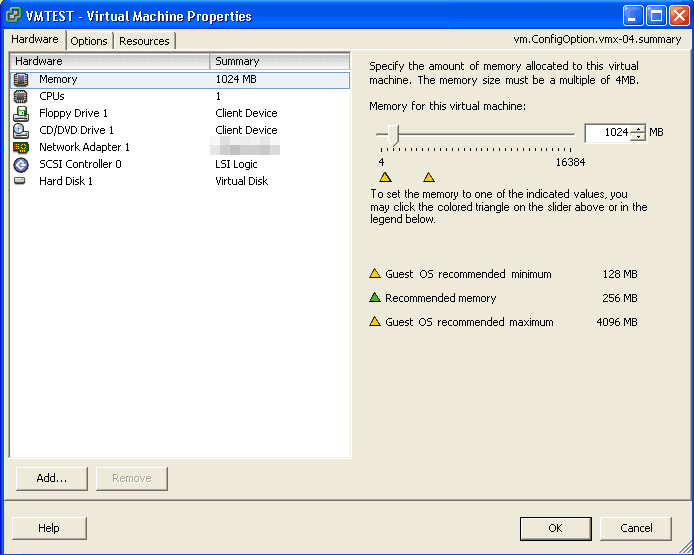

While we are talking about our precious virtual machines, look at all the configuration options we had way back when in VI3:

9. VMware Consolidated Backup (VCB)

Speaking of death by backup, VMware Consolidated Backup (VCB) was VMware’s answer to this problem. It debuted in the ESX 3.0 days and was meant to offload backup work from ESX servers while integrating with commonly used backup solutions.

Version 1.0.2 of VCB was released in 2007 and had exciting features like Windows 2003 R2 support for the proxy server. Sadly, VCB never really took off. While VMware is really good at virtualizing things, VCB often caused more headaches than it solved.

VMware vSphere versions after vSphere 4.1 no longer supported VCB (or the service console), and it topped out at VCB version 1.5. Add this one to the VMware product graveyard.

10. There Was Never Enough Storage for VMware

When it comes to block-based storage, VMware uses a file system called VMFS (Virtual Machine File System). In many cases, a new version of the VMware infrastructure products introduced a new version of VMFS, for good reason.

Back in the days of VMFS-2 and VMFS-3, the largest virtual disk size possible was 2TB (in VMFS-3 it was 2TB-512B if you want to be really specific). The limitation on VMFS-3 was a big deal, since it was the filesystem used both by ESX 3 and ESX 4, which means it was around for a very long time, and present during one of the most rapid phases of VMware’s growth.
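To put "2TB minus 512 bytes" in concrete numbers, here is the arithmetic as a short Python sketch. It assumes that "TB" here means a binary terabyte (2^40 bytes) and that the 512 bytes correspond to one classic disk sector; the helper `fits_on_vmfs3` is a hypothetical name used only for illustration. The 62TB VMFS-5 limit from vSphere 5.5 is included for comparison under the same assumption.

```python
# Assumption: "TB" means a binary terabyte (TiB, 2**40 bytes).
TIB = 2 ** 40   # 1,099,511,627,776 bytes
SECTOR = 512    # bytes in one classic disk sector

vmfs3_max_vmdk = 2 * TIB - SECTOR   # VMFS-3: 2TB minus 512 bytes
vmfs5_55_max_vmdk = 62 * TIB        # VMFS-5 on vSphere 5.5: 62TB


def fits_on_vmfs3(disk_bytes: int) -> bool:
    """Hypothetical helper: True if a virtual disk of this size is within the VMFS-3 limit."""
    return disk_bytes <= vmfs3_max_vmdk


print(f"{vmfs3_max_vmdk:,}")   # 2,199,023,255,040
print(fits_on_vmfs3(2 * TIB))  # False: a full 2TB disk is one sector too big
```

In other words, the "2TB" disk you asked for in the wizard was never quite 2TB; the largest usable size stopped one sector short.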

Along with this file size limit, VMFS-3 also had a LUN size limit, which was arguably even more problematic. Remember, even if you had a storage array capable of great things like deduplication, at this point in time it was all done at the LUN or volume level.

Things got better when vSphere 5 came out, along with VMFS-5. While the file size limit stayed the same, the maximum volume size jumped to 64TB. With vSphere 5.5, VMFS-5 was enhanced even further, and the file size limit became 62TB.

11. vRAM Licensing

Along with VMFS-5 a new licensing model was introduced with vSphere 5, based on the amount of vRAM in a virtual machine. If you were not working with vSphere when vSphere 5 came out, you probably have not heard of this one.

Simply put, instead of socket-based licenses, VMware was going to base their licensing model on the amount of vRAM configured for each virtual machine. Mass hysteria is an understatement: the model was not received well, since many were running environments with massive memory overcommitment. The model was quickly revised before being abandoned the next year.

While it was a bit of a mess for VMware, it is once again a testament to how seriously VMware takes customer feedback and how well they listen to their customer base. Kudos to them!

12. Virtual Port Limits

I just mentioned overcommitting memory resources. Especially for development and test workloads, administrators will often overcommit vSphere resources in order to run more virtual machines, particularly when performance is not the most important quality of the environment.

During the reign of our good friend VI3, there was a limit of 1016 ports per virtual switch. That is not completely horrible, though some hit that limit easily. What was worse, though, is that the default number of virtual switch ports was 56, which took no time at all to hit.

If you did not remember to increase the number of virtual ports during the build process, you were in for a nasty surprise later when you ran out of switch ports.

Also, back in the day, if you were lucky, you had a bunch of 1 gig NICs to tie together to make things work. The worst was trying to get everything working with only 2 little NICs! Be sure to start with my Introduction to VMware NIC Teaming and read the series to learn more about how it is done!

13. Virtual Desktop Manager

Did you know 2007 was the original year of VDI? VMware’s first VDI product, Virtual Desktop Manager, also known as VDM, made its debut that year. I was on the cutting edge during the first year of VDI and had it running in production. For fun, you can find the VDM 2 release notes here.

The problem back then was, hands down, how much it cost to host desktops on your VMware infrastructure. Remember, hosts had fewer resources and were far more expensive than they are today, and the ultimate killer was the price of the ESX host’s storage. While virtual desktops brought a huge number of benefits, organizations just were not biting in the early days due to cost. My deployment was very small and served a very particular use case; it was not something that everyone was going to have.

Eventually, this product would be known as View, then Horizon as we know it today.

Your VMware vSphere History Lesson

I hope you enjoyed your VMware vSphere history lesson. I have been working with VMware products for quite some time now; my first version of ESX was 2.0. I really cut my teeth on ESX 2.5.4 and ESX 3.0.2, and after that, I pretty much knew what I was doing when it came to ESX. Quite a bit has changed from ESX 2.0 to vSphere 6.7 U2, and I am looking forward to seeing what comes next in the progression of VMware vSphere.

Melissa is an Independent Technology Analyst & Content Creator, focused on IT infrastructure and information security. She is a VMware Certified Design Expert (VCDX-236) and has spent her career focused on the full IT infrastructure stack.