I happen to have a background in both VMware vSphere design and disaster recovery, but these two things are not often talked about in tandem. In this guide, I’m going to walk you through some of the most important considerations when it comes to designing and building a vSphere-based disaster recovery environment. I’ll also provide some insight on designing creative solutions that will meet a wide variety of recovery time objectives (RTOs) and recovery point objectives (RPOs) by means of a fictional case study.

We’ll be focusing on the logical side of designing vSphere for disaster recovery. You’ll see terms like backup and replication, but we won’t get into specific vendors or products.

Before we get started on this design case study, let’s set the stage a little bit more.

Why DR Gets Ignored

Disaster recovery is usually one of those things we like to push to the side. After all, it can be a lot of work, and well, will you ever really use it?

Disaster Recovery and Business Continuity planning often sits with the information security organization. While in the past, it was easy to sign off on the risk of a traditional DR event happening and simply accept it, the rise of ransomware has changed that paradigm.

In today’s environment, the answer to whether you will ever really use it is yes. Unfortunately, it isn’t a question of if you are hit by ransomware, but when. Coupled with the threats we are already used to, this means disaster recovery isn’t something we can just ignore.

When it comes to upgrading or even putting together your first disaster recovery site, the tendency may be to take a few shortcuts. After all, it is just going to sit there, isn’t it?

We’re going to walk through some of the key ideas you should keep in mind during this process of designing a vSphere-based disaster recovery environment.

Introducing Majestic Laboratories

Throughout this guide, we’re going to reference a fictional company called Majestic Laboratories. Majestic Laboratories is a research-based company that specializes in boutique microchip design and manufacturing. The space they operate in is highly competitive. Majestic Laboratories is in the process of re-architecting both their data protection environment and their VMware vSphere-based disaster recovery environment.

We’re going to use this example to provide some practical design ideas.

I’ll talk about what I think about when designing a solution to meet each required RTO and RPO in a general sense, then I’ll provide ideas on how to meet these requirements. There are many ways to meet requirements beyond what is in this document.

These ideas are just that, ideas. They are not meant to be implemented in a production environment as exactly stated here. Before implementing a disaster recovery environment, it is important to collect requirements and analyze them.

Majestic Laboratories Design Methodology

The Majestic Laboratories design methodology is based on flexibility, and multiple ways to achieve the same RPO and RTO outcomes. The first part of the activity will be verifying the application RPOs and RTOs with business stakeholders.

Next will be a sizing activity to determine the resources they need to recover.

Once they determine the required resources for their recovery, they will purchase and deploy additional VMware vSphere clusters in their second data center to meet this requirement.

The design decisions during this project are being driven by maximizing the current hardware and software they have while ensuring they can remain flexible and competitive as the landscape they operate in changes.

A Brief Introduction to Business Impact Analysis (BIA)

Before you can properly design and deploy a DR environment, you need to understand what it is you are recovering and the associated requirements.

The business impact analysis is a crucial step when it comes to creating a DR plan, and as such, designing a DR site.

You need to understand each application’s Recovery Point Objective, Recovery Time Objective, and Service Level Agreement so you can properly plan two main things:

- How you are going to protect them (RPO)

- How you are going to recover them (RTO)

Understanding the applications you have and their associated requirements is the first step in designing your DR environment.

Availability and SLA

Some areas that can get confusing in the context of disaster recovery are availability and SLA and understanding how they relate to each other.

Availability is measured in “nines”. I’m sure you have heard terms along the lines of “three nines” and “five nines”. These nines translate into how much unplanned downtime is allowed in the environment. One great site to look at is uptime.is, which will tell you what these SLA numbers translate to.

People love to throw around terms like Availability, SLA, RTO and RPO, but don’t often stop to think about how they relate, and how they can impact each other.

For example, 99.9% or three nines translates to 8 hours 45 minutes and 56 seconds of unplanned downtime per year, and 99.999% or five nines translates to 5 minutes and 15 seconds of unplanned downtime per year.
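As a rough illustration of the arithmetic behind those figures, the sketch below converts an availability percentage into an annual downtime budget. The year length of 365.2425 days is an assumption, chosen because it reproduces the numbers quoted above, and the function name `downtime_budget` is made up for this example.

```python
from datetime import timedelta

# Average Gregorian year length in days (an assumption; it reproduces
# the three-nines and five-nines figures quoted in the text).
DAYS_PER_YEAR = 365.2425


def downtime_budget(availability_pct: float) -> timedelta:
    """Return the unplanned downtime allowed per year for an availability target."""
    seconds_per_year = DAYS_PER_YEAR * 24 * 60 * 60
    allowed_seconds = seconds_per_year * (1 - availability_pct / 100)
    return timedelta(seconds=int(allowed_seconds))  # truncate to whole seconds


for target in (99.9, 99.99, 99.999):
    print(f"{target}% -> {downtime_budget(target)} per year")
```

Each extra nine cuts the budget by a factor of ten, which is why the designs needed to hit these targets differ so much.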

Now let us put that into perspective. The way solutions are designed based on meeting these numbers is very different and has various levels of cost associated with them.

Tying this back to our friends RPO and RTO – these numbers must fall within our SLA! If our RPO or RTO exceeds our SLA, well we have missed the boat on meeting those required numbers.

vSphere Sizing for Disaster Recovery

When it comes to vSphere resources, I always size for peak loads in disaster recovery. Not everyone agrees with this, but there is one critical thing to remember in the event of a disaster: your disaster recovery site is now your production site.

I can’t tell you how many times I have seen people try to save money by putting huge SATA drives into disk arrays for VMware at the DR site. They banked on the fact that they would never use it, or that they could “deal with it” if they had to.

Or even worse, they would use their server hardware that was out of support for DR, since they probably wouldn’t ever need it.

This is a fundamentally flawed approach to disaster recovery that can put a company out of business. While it may check the boxes and please the auditors, if you can’t actually run your production workloads, you might as well not have bothered recovering them in the first place.

The trick is to use these resources for more than just disaster recovery, to prove their value and help you get the investment.

Back to sizing. Be sure to size for peak workloads, and keep a growth plan in mind. Are you sizing for 3 years up front? Or do you plan to add capacity to the environment on a regular basis? These are questions you should ask yourself at the beginning of your sizing activity.
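To make the growth question concrete, here is a minimal sketch of sizing for peak load with compound growth. Every input is hypothetical (the demand, per-host capacity, growth rate, and spare host count are not Majestic Laboratories figures), and a real sizing exercise would repeat this for CPU, memory, and storage rather than a single resource.

```python
import math


def hosts_needed(peak_demand: float, host_capacity: float,
                 annual_growth: float, years: int, spare_hosts: int = 1) -> int:
    """Hosts required to run peak load after `years` of compound growth."""
    projected_peak = peak_demand * (1 + annual_growth) ** years
    return math.ceil(projected_peak / host_capacity) + spare_hosts


# Hypothetical inputs: 2,000 GB of peak memory demand, 512 GB usable per host,
# 15% growth per year, and one spare host for failures or maintenance.
for horizon in (0, 1, 3):
    print(f"{horizon} year(s) out: {hosts_needed(2000, 512, 0.15, horizon)} hosts")
```

Comparing the result for year 0 with the result for year 3 shows the difference between buying up front and adding capacity on a regular basis.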

Choosing How to Protect Data

Now that we know our capacities and workloads, we need to go a step further and decide how to protect them. How we protect them ties back to our required RPO.

This is where choosing a data protection solution becomes extremely important, and some softer aspects of disaster recovery planning come into play.

Something we don’t often think about is the people. The people who will back up and restore these systems need to be well versed in the data protection solution. If you choose to leverage multiple solutions, they must be trained in all of them to be able to operate them properly. While using multiple solutions may be a way to meet your RPOs and RTOs, there is a cost to your teams for operating a multi-platform environment.

Next are the hardware and software requirements of the solution, and of course how much it costs. You’ll need to run the data protection solution someplace and ensure it meets the requirements to provide your desired RPOs and RTOs.

Choosing How to Recover Data

There are always multiple ways to recover something, but first and foremost you need to be able to meet your RTO. A recovery for a single server is also going to look very different from the recovery of an application with 100 servers in it.

If we’re talking about a disaster, recovery is arguably one of the most important things to keep in mind. A natural disaster or a ransomware attack won’t just impact a single server or application; we may need to recover our whole environment. This is where automation and orchestration come into play. The risk of having your poor backup administrator manually recover 1,000 servers is just too great, and you’ll never meet all of your RTOs.

Disaster recovery tests (if you actually perform them) will quickly show where everything breaks, but only if you test as if it were a real disaster. While restoring just a few servers may check the boxes, it won’t accurately tell you what breaks at scale.
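A quick back-of-the-envelope estimate shows why manual recovery does not scale. The 30 minutes per server and the number of parallel recoveries are hypothetical values picked for illustration, and the model assumes every server takes the same time to recover.

```python
import math


def recovery_hours(servers: int, minutes_per_server: float, parallel_streams: int) -> float:
    """Elapsed hours to recover all servers in equally sized parallel batches."""
    batches = math.ceil(servers / parallel_streams)
    return batches * minutes_per_server / 60


# Hypothetical: 30 minutes of work per server.
print(recovery_hours(1000, 30, parallel_streams=1))   # one administrator, one server at a time
print(recovery_hours(1000, 30, parallel_streams=20))  # orchestrated, 20 recoveries at once
```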

How Majestic Labs Protects Different Tiers of Data

Now, let’s break down a few different ways that Majestic Labs can protect their tiers of data. Here are the results of the Business Impact Analysis (BIA). Majestic Labs has 1,000 servers.

| Tier | % | Servers | RPO | RTO |
|---|---|---|---|---|
| Tier 1 | 5% | 50 | 15 sec – 5 min | 1 h |
| Tier 2 | 25% | 250 | 30 min | 1 h |
| Tier 3 | 35% | 350 | 12 h | 12 h |
| Tier 4 | 35% | 350 | 24 h | 1 w |

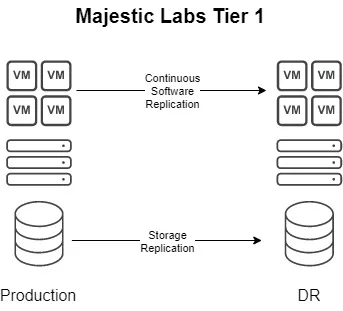
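The BIA results can also be captured as data, which makes them easy to reuse in sizing and monitoring scripts. This is only a sketch: the `Tier` class and `server_count` helper are names invented for this example, and the Tier 1 RPO is stored as the strictest value in its range.

```python
from dataclasses import dataclass
from datetime import timedelta

TOTAL_SERVERS = 1000


@dataclass(frozen=True)
class Tier:
    name: str
    share_pct: int   # share of all servers, from the BIA
    rpo: timedelta   # strictest RPO in the tier
    rto: timedelta


TIERS = [
    Tier("Tier 1", 5, timedelta(seconds=15), timedelta(hours=1)),
    Tier("Tier 2", 25, timedelta(minutes=30), timedelta(hours=1)),
    Tier("Tier 3", 35, timedelta(hours=12), timedelta(hours=12)),
    Tier("Tier 4", 35, timedelta(hours=24), timedelta(weeks=1)),
]


def server_count(tier: Tier, total: int = TOTAL_SERVERS) -> int:
    """Number of servers in a tier, derived from its percentage share."""
    return total * tier.share_pct // 100


for tier in TIERS:
    print(f"{tier.name}: {server_count(tier)} servers, RPO {tier.rpo}, RTO {tier.rto}")
```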

Protecting & Recovering Majestic Laboratories’ Tier 1 Data

Tier 1 has a total of 50 servers, with RPOs ranging from 15 seconds to 5 minutes and an RTO of 1 hour for all Tier 1 apps.

While there is a bit of a range in RPO when it comes to Tier 1, they are all extremely low. There are a number of ways to accomplish this, of course, such as using a storage array with synchronous storage replication. The main drawback of synchronous storage replication is you need to have storage arrays of the same vendor in each location, and some vendors have pretty stringent requirements for synchronous replication.

Another way to accomplish this is using replication software, which removes the requirement for like storage arrays and is generally more flexible.

The biggest issue when hitting a low RPO is usually network bandwidth, which we will need to account for in our vSphere design. We also need to understand the change rate of these applications, which of course we did during our sizing activity.
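The relationship between change rate and bandwidth can be sketched with simple arithmetic. The change rate and headroom factor below are hypothetical, and the model ignores compression, deduplication, and short bursts, so treat the result as a starting point rather than a design number.

```python
def required_mbps(changed_gb_per_hour: float, headroom: float = 1.3) -> float:
    """Sustained link speed (megabits/s) needed to keep up with a given change rate."""
    megabits_per_hour = changed_gb_per_hour * 8 * 1000  # decimal GB -> megabits
    return megabits_per_hour / 3600 * headroom


# Hypothetical: 50 Tier 1 servers, each changing 2 GB of data per hour at peak.
peak_change_gb = 50 * 2
print(f"{required_mbps(peak_change_gb):.0f} Mbps")
```

If the link cannot sustain the peak change rate, replication falls behind and the effective RPO grows, no matter what the replication product is configured to do.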

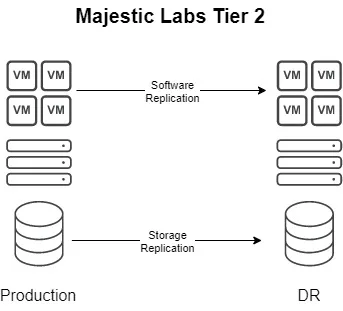

Protecting & Recovering Majestic Laboratories’ Tier 2 Data

In Tier 2, we have an RPO of 30 minutes, an RTO of 1 hour, and 250 servers in total making up a number of applications.

Once RTOs are measured in hours and RPOs in tens of minutes rather than seconds, we have many more options. A 30 minute RPO is easily achievable with asynchronous storage replication or software replication. At Majestic Laboratories, we have a combination of various storage arrays we use for our VMware environment, some of which are capable of asynchronous replication.

However, we aren’t sure what storage arrays we may purchase in the future, which is why we are also choosing to implement software-based replication.

For 100 of the 250 servers, we are going to configure software-based replication.

For the remaining 150 of the servers, we are going to use asynchronous storage replication.

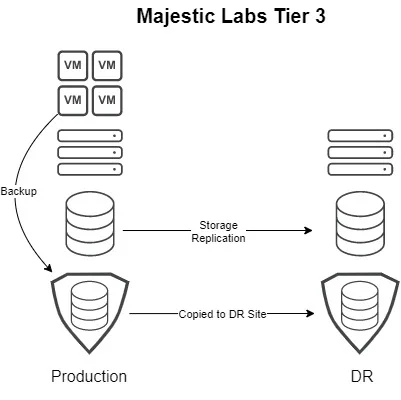

Protecting & Recovering Majestic Laboratories’ Tier 3 Data

Tier 3 has 350 VMs, an RPO of 12 hours, and an RTO of 12 hours.

Since our RPO and RTO have increased with Tier 3, we have a number of options available to us.

Once again, we will take a two pronged approach.

We will leverage a combination of asynchronous storage replication, and backups. For the time being, some of these VMs are on arrays capable of asynchronous replication, so we will leverage it.

100 VMs will have backups powered by storage snapshots since the array they are running on supports it.

For the other 250 VMs, we will run a backup job every 8 hours, and send a copy of that backup to our DR site.
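An 8 hour backup interval fits inside a 12 hour RPO on paper, but the copy at the DR site is always older than the interval alone suggests. The sketch below assumes each backup captures the VM state at job start and that the offsite copy begins when the backup finishes. The job and copy durations are hypothetical.

```python
from datetime import timedelta


def worst_case_rpo(interval: timedelta, backup_duration: timedelta,
                   copy_duration: timedelta) -> timedelta:
    """Oldest the newest DR-site copy can be, just before the next copy lands."""
    return interval + backup_duration + copy_duration


rpo_target = timedelta(hours=12)
# Hypothetical durations: the backup job takes 1 hour, the copy to DR takes 2 hours.
exposure = worst_case_rpo(timedelta(hours=8), timedelta(hours=1), timedelta(hours=2))
print(f"Worst-case data loss: {exposure}, within RPO: {exposure <= rpo_target}")
```

With these numbers there is only an hour of slack, so a slow job or a congested link is enough to miss the RPO.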

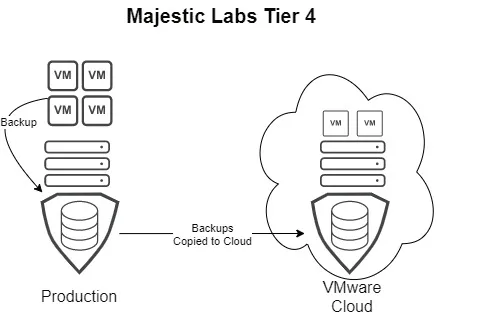

Protecting & Recovering Majestic Laboratories’ Tier 4 Data

Tier 4 consists of 350 servers, with an RPO of 24 hours and an RTO of 1 week. Majestic Laboratories is going to try something radically different with Tier 4 recovery as part of this project.

Majestic Laboratories has been examining VMware Cloud Solutions for some time, and is going to choose one for their Tier 4 recovery.

Majestic Laboratories will maintain a minimal environment in the chosen VMware Cloud solution with the services they need to support their recovery, such as AD and DNS.

Should a disaster happen and be deemed a prolonged outage, Majestic Laboratories will scale their VMware Cloud deployment to the required capacity and begin orchestrated recovery from backups, which will be waiting for them in their cloud of choice.

Since the RTO for Tier 4 is 7 days, Majestic Laboratories may not immediately begin to restore these applications if the outage is not expected to be prolonged.

These VMs will be protected by backup, and then a copy of the backup will be sent to object storage in the VMware cloud solution they have chosen.

Verifying RPOs and RTOs

Once we have the plan in place and all VMs are protected, it is important for Majestic Labs to constantly verify their RPOs and RTOs to ensure they can meet them at a moment’s notice.

RPO verification is the easier part. We need to be monitoring our data protection jobs and storage replication to make sure all jobs are running and finishing as expected.
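In practice, this kind of check can be as simple as comparing the age of each workload’s newest restore point with its RPO. The sketch below is one way to express that. The workload names and timestamps are hypothetical, and in a real environment the restore point times would come from whatever reporting your data protection and replication tools provide.

```python
from datetime import datetime, timedelta

RPO_BY_TIER = {
    "Tier 2": timedelta(minutes=30),
    "Tier 3": timedelta(hours=12),
    "Tier 4": timedelta(hours=24),
}


def rpo_violations(restore_points, now):
    """Return the workloads whose newest restore point is older than their RPO.

    `restore_points` maps a workload name to (tier, time of last good restore point).
    """
    return sorted(
        name
        for name, (tier, last_good) in restore_points.items()
        if now - last_good > RPO_BY_TIER[tier]
    )


# Hypothetical snapshot of job results at a fixed point in time.
now = datetime(2024, 1, 1, 12, 0)
restore_points = {
    "erp-db": ("Tier 2", now - timedelta(minutes=20)),
    "file-srv": ("Tier 3", now - timedelta(hours=16)),  # missed a backup cycle
    "archive": ("Tier 4", now - timedelta(hours=23)),
}
print(rpo_violations(restore_points, now))
```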

RTO verification can get a bit trickier. We need to do real world disaster recovery testing on the Majestic Labs systems. The key to these disaster recovery tests will be automation to ensure we can run them on an ongoing basis, and find potential recovery issues so we will meet our RTOs.

Planning Your Disaster Recovery Plan

If you haven’t already implemented a disaster recovery plan in your environment, or you have one you haven’t quite tested properly, now is the time to act.

We outlined the key activities in this guide, but it can be a bit overwhelming if you’re just getting started.

Here are some final words of advice.

Start with Your Applications

Look, I’m an infrastructure person through and through. However, when it comes to properly designing an infrastructure solution, especially for DR, we really need to start with the applications.

We need that solid Business Impact Analysis. We need to know what our RPO and RTO are for each and every application, and sometimes we need to dig deeper than that. It’s a cross functional discussion that has to happen between many stakeholders, and can’t be decided in a vacuum.

We need to make sure we’re making data driven decisions when we say what our RPOs and RTOs are, and many times they come back to cost, which can even be hard to quantify these days.

How much money will we lose if this application is down? Will this application being down hurt our reputation as a company? Will our competitors use it against us? These are all things to consider beyond how much that backup repository is going to cost.

Assess Your Current Infrastructure and Get Creative

The next step is to assess what infrastructure you currently have and any gaps you have in recovery capabilities.

I’ve been in this situation a few times where we knew we had a recovery gap, but it wasn’t as simple as just fixing it and immediately getting new hardware. We had an active/active approach, and there was a period of time where only one site would have new hardware.

As we all know, projects take time. The risk of trying to simultaneously deploy two sites of vSphere was too much, so they decided on this approach. Honestly, I wish VMware cloud had been an option a decade ago, but it simply did not exist yet.

For example, after meeting with key stakeholders we decided we would accept the risk of not being able to recover tier 3 and 4 during a disaster, and what’s more, accept that we would POWER OFF production tier 3 and 4 in our recovery site (Site 2), so we had the capacity to recover all of Tier 1 and Tier 2 from Site 1.
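The capacity trade-off behind that decision can be sketched as a simple check. All of the numbers below are hypothetical capacity units invented for illustration, not figures from the environment described above.

```python
def can_absorb_failover(site_capacity, usage_by_tier, incoming_demand, tiers_to_power_off):
    """True if the site can host the incoming workloads after powering off the given tiers."""
    still_running = sum(
        usage for tier, usage in usage_by_tier.items()
        if tier not in tiers_to_power_off
    )
    return still_running + incoming_demand <= site_capacity


# Hypothetical capacity units for Site 2 and its own production workloads.
site2_capacity = 100
site2_usage = {"Tier 1": 10, "Tier 2": 25, "Tier 3": 30, "Tier 4": 25}
incoming_tier1_and_2 = 40  # Tier 1 and Tier 2 failing over from Site 1

print(can_absorb_failover(site2_capacity, site2_usage, incoming_tier1_and_2, set()))
print(can_absorb_failover(site2_capacity, site2_usage, incoming_tier1_and_2, {"Tier 3", "Tier 4"}))
```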

Track RPOs and Test, Test, Test

The last piece of advice is to watch those RTOs and RPOs. Track your RPOs from the time you configure your first data protection job. As you add to your infrastructure, you want to make sure you can constantly meet those numbers. Things may change as you protect more workloads.

Similarly, test, then test some more. While you may meet an RTO of a single application, you may not be able to meet the RTO of multiple applications at once! Real world disaster recovery testing is key, and must be automated.
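Here is a simplified sketch of why an application can meet its RTO alone but miss it during a full recovery. It assumes restores run one after another through a single shared restore path at a fixed throughput, which is a deliberate simplification. The application names, sizes, throughput, and RTO are hypothetical.

```python
def completion_hours(apps, throughput_tb_per_hour):
    """Finish time in hours for each app when restores share one path, in order."""
    elapsed = 0.0
    finish = {}
    for name, size_tb in apps:
        elapsed += size_tb / throughput_tb_per_hour
        finish[name] = elapsed
    return finish


rto_hours = 4
throughput = 2  # TB per hour, hypothetical

# Restored on its own, each application finishes inside the RTO.
print(completion_hours([("app-a", 4)], throughput))
print(completion_hours([("app-b", 6)], throughput))

# Restored together, the second application finishes after the RTO.
together = completion_hours([("app-a", 4), ("app-b", 6)], throughput)
print({name: hours <= rto_hours for name, hours in together.items()})
```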

Designing for Disaster

I hope you now have more insight when it comes to vSphere disaster recovery planning and design. Please feel free to ping me on Twitter if you have any questions or if you’d like something else added to this post.

Melissa is an Independent Technology Analyst & Content Creator, focused on IT infrastructure and information security. She is a VMware Certified Design Expert (VCDX-236) and has spent her career focused on the full IT infrastructure stack.