During the rise of AI, NVIDIA has become almost a household name. Even those outside of tech have heard of the company at this point. Until recently, NVIDIA was best known for graphics cards, but those same GPUs have taken on a whole new life in the age of AI.

The truth is, NVIDIA is far more than just the card that goes into a gaming computer. AI absolutely needs Graphics Processing Units (GPUs) to work its magic, but NVIDIA’s reach goes much deeper than that.

The world’s most advanced AI models don’t just run on GPUs; they run on systems purpose-built for AI at scale. That’s the promise of NVIDIA DGX.

As generative AI tools like ChatGPT and Grok have moved from experimental stages into everyday life, enterprises have found themselves in need of infrastructure built specifically for these workloads. Remember: large language models (LLMs) must be trained, and that training requires massive amounts of compute power.

What Is NVIDIA DGX?

DGX systems deliver the foundation for AI computing. They’re designed specifically for AI, combining high-performance hardware, software, and networking into a turnkey platform for scaling deep learning, machine learning, and generative AI workloads.

NVIDIA DGX is a fully integrated AI system built, validated, and supported directly by NVIDIA. Each DGX node is engineered for maximum performance, reliability, and scalability. DGX systems combine NVIDIA Tensor Core GPUs, NVLink and NVSwitch interconnects, optimized CPUs, and high-speed networking. This deep integration allows organizations to train and deploy AI models faster and more efficiently than traditional infrastructure.

Current models include the DGX B300, DGX B200, and DGX H200 systems. The B300 and B200 are based on the Blackwell architecture, while the H200 is based on the Hopper architecture. You may also see DGX A100 and DGX H100 systems referenced in NVIDIA literature; those are previous-generation models.

A DGX is far more than just a server. It powers the massive compute systems that enable AI training and inference at scale. To really understand DGX, we have to look beyond a single node.

Architecture Overview

When it comes to the enormous compute demands of AI, organizations naturally wonder how to piece everything together. NVIDIA has done the hard work by creating reference architectures for deploying DGX systems.

These reference architectures include:

- NVIDIA DGX Systems

- NVIDIA Networking (for compute, storage, and management)

- Partner Storage

- NVIDIA Software (Base Command, NGC, AI Enterprise)

You’ll often see older DGX generations in these reference architectures. Having written reference architectures myself, I can tell you that these massive engineering efforts take time to update. They remain highly relevant even when they reference prior-generation systems.

Let’s break down the components.

NVIDIA DGX Systems

At the heart of every reference architecture sits the DGX node itself, an 8-GPU powerhouse engineered for AI at scale. The latest DGX B300 packs eight Blackwell Ultra GPUs. Earlier DGX B200 and DGX H100/H200 systems remain production staples, while the DGX A100 continues to appear in legacy blueprints.

See: NVIDIA DGX Platform

NVIDIA Networking

DGX clusters live or die by interconnect latency and bandwidth. Expect NVIDIA’s NDR InfiniBand and Spectrum-X Ethernet fabrics to deliver 400 Gbps per port, with switch latency measured in nanoseconds.
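To put the per-port figure in per-node terms, here is a back-of-the-envelope calculation. It assumes, purely for illustration, one 400 Gbps compute-fabric port per GPU on an 8-GPU node; that port count and the helper names are assumptions, not values taken from a specific reference architecture.

```python
# Back-of-the-envelope compute-fabric bandwidth for one DGX node.
# ASSUMPTION (illustrative only): one 400 Gb/s compute port per GPU.

GPUS_PER_NODE = 8               # DGX nodes are 8-GPU systems
PORT_GBITS = 400                # Gb/s per port, as cited for NDR / Spectrum-X
PORTS_PER_NODE = GPUS_PER_NODE  # hypothetical 1:1 GPU-to-port mapping


def node_bandwidth_gbits(ports: int = PORTS_PER_NODE, port_gbits: int = PORT_GBITS) -> int:
    """Total compute-fabric bandwidth per node in one direction, in Gb/s."""
    return ports * port_gbits


def gbits_to_gbytes(gbits: float) -> float:
    """Convert gigabits per second to gigabytes per second (8 bits per byte)."""
    return gbits / 8


if __name__ == "__main__":
    total = node_bandwidth_gbits()
    print(f"{total} Gb/s per node = {gbits_to_gbytes(total):.0f} GB/s")
    # prints "3200 Gb/s per node = 400 GB/s"
```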

Most NVIDIA reference architectures use four fabrics: compute, storage, in-band management, and out-of-band management.

See: NVIDIA Quantum InfiniBand Switches

Partner Storage

All of this compute power is useless without storage fast enough to keep up with it. The DGX reference architectures specify a great deal, but not the storage hardware itself. They define storage performance requirements in GB/s, and the actual storage systems must be procured from one of NVIDIA’s storage partners.
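Because the reference architectures state storage needs as throughput rather than as specific hardware, first-pass sizing is simple arithmetic. This sketch multiplies a per-node read rate by the node count and estimates checkpoint write time; the per-node rate, checkpoint size, and function names are hypothetical placeholders, not NVIDIA-published figures.

```python
# Rough storage-throughput sizing for a DGX cluster.
# All input values used below are HYPOTHETICAL placeholders, not NVIDIA specs.


def aggregate_read_gbytes(nodes: int, per_node_gbytes: float) -> float:
    """Aggregate read throughput (GB/s) if every node reads at the same rate."""
    if nodes < 1 or per_node_gbytes <= 0:
        raise ValueError("nodes must be >= 1 and per-node throughput must be positive")
    return nodes * per_node_gbytes


def checkpoint_seconds(checkpoint_tb: float, write_gbytes: float) -> float:
    """Seconds to write a checkpoint of `checkpoint_tb` TB at `write_gbytes` GB/s.

    Uses decimal units (1 TB = 1000 GB) and ignores metadata and protocol overhead.
    """
    if write_gbytes <= 0:
        raise ValueError("write throughput must be positive")
    return checkpoint_tb * 1000 / write_gbytes


if __name__ == "__main__":
    # Hypothetical: 32 nodes, each needing 4 GB/s of sustained reads.
    print(aggregate_read_gbytes(32, 4.0))   # 128.0 (GB/s)
    # Hypothetical: a 2 TB checkpoint written at 100 GB/s.
    print(checkpoint_seconds(2.0, 100.0))   # 20.0 (seconds)
```

The takeaway is that the storage target scales with node count, so it has to be sized alongside the compute rather than after it.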

Stay tuned for a more in-depth look at NVIDIA DGX storage.

NVIDIA Software (Base Command, NGC, AI Enterprise)

The software stack is what turns metal into production AI. NVIDIA Base Command Manager handles multi-node job orchestration, Slurm integration, and health monitoring from a single pane of glass.
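For a sense of what a multi-node job looks like from inside one process, here is a minimal sketch that reads the standard environment variables Slurm sets for tasks launched with `srun`. This is generic Slurm behavior, not a Base Command Manager API. It assumes one task per GPU (8 tasks per DGX node), and the `SlurmRank` type and `rank_from_slurm` helper are hypothetical names.

```python
# Derive distributed-training rank info from Slurm's per-task environment.
# ASSUMPTION: the job was launched with srun, one task per GPU.
import os
from dataclasses import dataclass
from typing import Mapping, Optional


@dataclass(frozen=True)
class SlurmRank:  # hypothetical helper type
    world_size: int   # total tasks across all nodes (SLURM_NTASKS)
    global_rank: int  # this task's rank within the job (SLURM_PROCID)
    local_rank: int   # this task's rank on its own node (SLURM_LOCALID)
    num_nodes: int    # nodes allocated to the job (SLURM_NNODES)


def rank_from_slurm(env: Optional[Mapping[str, str]] = None) -> SlurmRank:
    """Read rank information from the environment (defaults to os.environ)."""
    env = os.environ if env is None else env
    return SlurmRank(
        world_size=int(env["SLURM_NTASKS"]),
        global_rank=int(env["SLURM_PROCID"]),
        local_rank=int(env["SLURM_LOCALID"]),
        num_nodes=int(env["SLURM_NNODES"]),
    )


if __name__ == "__main__":
    # Simulated environment for task 13 of a 4-node, 32-GPU job.
    fake_env = {"SLURM_NTASKS": "32", "SLURM_PROCID": "13",
                "SLURM_LOCALID": "5", "SLURM_NNODES": "4"}
    print(rank_from_slurm(fake_env))
```

In a typical training script, `local_rank` would select the GPU on the node, while `global_rank` and `world_size` would feed the framework's distributed initialization.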

NGC hosts thousands of GPU-optimized containers, everything from PyTorch 2.5 to NeMo Megatron, all prebuilt and security-scanned.

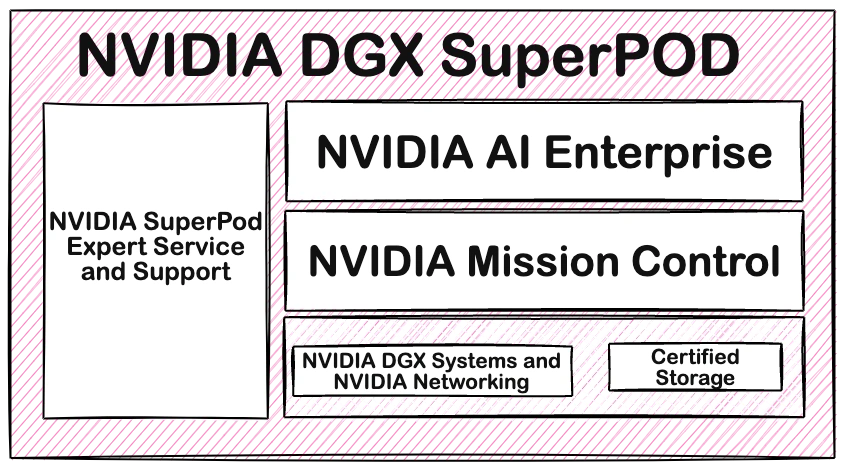

NVIDIA AI Enterprise adds enterprise-grade support, Triton Inference Server, and RAPIDS for data science. A single AI Enterprise license covers the entire SuperPOD.

See: NVIDIA AI Enterprise

DGX Reference Architectures: DGX BasePOD and DGX SuperPOD

With the DGX node defined, these reference architectures treat it as a standardized, repeatable unit. Whether deploying a 4-node BasePOD or a 1,024-GPU SuperPOD, every DGX B300, B200, or H100 arrives fully validated with the same firmware, BIOS, and NVLink topology.

The BasePOD architecture begins at 2 nodes and scales to 40, while the SuperPOD architecture starts at 32 nodes and scales into the thousands.

For the DGX B300 architecture, SuperPODs are built from scalable units (SUs) of 64 nodes each. NVIDIA’s certification ensures any DGX system slots seamlessly into BasePOD or SuperPOD blueprints without modification. The DGX SuperPOD architectures were recently updated with the release of the B300.
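The node counts above translate into a quick planning check. This sketch encodes only the ranges stated in this section (BasePOD from 2 to 40 nodes, SuperPOD from 32 nodes, 64-node SUs for DGX B300). It assumes deployments round up to whole SUs, which is a simplification, and the function names are hypothetical.

```python
# Quick sizing check using the ranges described in this section.
import math

GPUS_PER_NODE = 8
BASEPOD_MIN_NODES, BASEPOD_MAX_NODES = 2, 40
SUPERPOD_MIN_NODES = 32
B300_NODES_PER_SU = 64  # scalable-unit size for DGX B300 SuperPOD


def candidate_architectures(nodes: int) -> list[str]:
    """Reference architectures whose stated range covers `nodes` (ranges overlap)."""
    options = []
    if BASEPOD_MIN_NODES <= nodes <= BASEPOD_MAX_NODES:
        options.append("BasePOD")
    if nodes >= SUPERPOD_MIN_NODES:
        options.append("SuperPOD")
    return options


def b300_scalable_units(nodes: int) -> int:
    """64-node SUs needed for `nodes` DGX B300 systems, rounded up (simplification)."""
    if nodes < 1:
        raise ValueError("nodes must be >= 1")
    return math.ceil(nodes / B300_NODES_PER_SU)


if __name__ == "__main__":
    for n in (4, 36, 128):
        print(n, "nodes,", n * GPUS_PER_NODE, "GPUs,", candidate_architectures(n))
    print(b300_scalable_units(128), "SUs for 128 nodes")
```

For example, a 1,024-GPU SuperPOD is 128 eight-GPU nodes, which is two 64-node SUs.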

See: NVIDIA SuperPOD DGX B300 Systems, Spectrum-4 Ethernet and DC Busbar Power Reference Architecture

When we look at the DGX storage components, the certified storage partners differ between DGX BasePOD and DGX SuperPOD:

| BasePOD Storage Vendors | SuperPOD Storage Vendors |
|---|---|
| DDN | DDN |
| Dell | Dell |
| Hewlett Packard Enterprise | |
| Hitachi Vantara | |
| IBM | IBM |
| NetApp | NetApp |
| Pure Storage | Pure Storage |
| VAST | VAST |
| WEKA | WEKA |

Storage is one of those infrastructure areas I know way too much about, so stay tuned for detailed guides on picking the right storage for your BasePOD or SuperPOD architecture.

DGX Rack Scale – DGX GB300 NVL72

Now let’s talk about DGX at a different scale. The DGX GB300 NVL72 is a rack-scale DGX system. The fully liquid-cooled rack integrates 72 Blackwell Ultra GPUs and 36 NVIDIA Grace CPUs.

Those 72 Blackwell Ultra GPUs form a single NVLink domain and act as one gigantic, rack-sized GPU. Think huge frontier models with trillions of parameters.

A system at this level also requires more extensive data center facilities planning, since it draws around 150 kW per rack.
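The rack-level numbers are easier to reason about when broken down. This sketch uses the figures from this section (72 GPUs, 36 Grace CPUs, roughly 150 kW per rack). The 1 MW facility budget in the example is hypothetical, and the per-GPU figure is simply rack power divided by GPU count, so it also absorbs the CPUs, NVLink switches, and other rack components.

```python
# Breaking down the GB300 NVL72 rack figures cited in this section.
GPUS_PER_RACK = 72   # Blackwell Ultra GPUs
CPUS_PER_RACK = 36   # Grace CPUs
RACK_KW = 150.0      # approximate rack draw


def gpus_per_cpu(gpus: int = GPUS_PER_RACK, cpus: int = CPUS_PER_RACK) -> float:
    """GPU-to-CPU ratio within one rack."""
    return gpus / cpus


def kw_per_gpu(rack_kw: float = RACK_KW, gpus: int = GPUS_PER_RACK) -> float:
    """Whole-rack power divided by GPU count (includes CPUs, switches, etc.)."""
    return rack_kw / gpus


def racks_within_budget(facility_kw: float, rack_kw: float = RACK_KW) -> int:
    """Whole racks that fit in a power budget, ignoring cooling and redundancy overhead."""
    return int(facility_kw // rack_kw)


if __name__ == "__main__":
    print(gpus_per_cpu())                  # 2.0
    print(round(kw_per_gpu(), 2))          # 2.08
    print(racks_within_budget(1000.0))     # 6 racks in a hypothetical 1 MW budget
```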

Conclusion

NVIDIA DGX represents the gold standard for production AI infrastructure. From individual nodes to exascale AI factories, DGX provides the proven foundation for training and deploying the world’s most advanced AI models.

NVIDIA DGX systems come in multiple form factors, from clustered 8-GPU DGX systems that each occupy roughly 8 to 10 rack units (RUs), to DGX rack-scale systems with 72 GPUs. That flexibility offers a fit for every organization, from those starting with in-house RAG and inference to the AI giants building the future of foundation models.

Melissa is an Independent Technology Analyst & Content Creator, focused on IT infrastructure and information security. She is a VMware Certified Design Expert (VCDX-236) and has spent her career focused on the full IT infrastructure stack.