If you are talking about a vSphere host, you may see or hear people refer to it as ESXi, or sometimes ESX. No, someone didn’t just drop the “i”: there was a previous version of the vSphere Hypervisor called ESX. You may also hear ESX referred to as ESX classic or ESX full form.

Today I want to take a look at ESX vs ESXi and see what the difference is between them. More importantly, I want to look at some of the reasons VMware changed the vSphere hypervisor architecture beginning in 2009.

What is ESX? (Elastic Sky X)

Wondering what ESX is? ESX stands for Elastic Sky X, and it is what VMware’s bare metal hypervisor, the one we all know and love, was originally called. For many of us, it is where enterprise virtualization really got started.

For today’s VMware history lesson, we are going to start with ESX. While the functionality of today’s ESXi hosts is very similar to ESX (though much more advanced), there are some important architectural differences.

The main difference was the ESX service console. In fact, my first ever blog post was dedicated to the ESX service console.

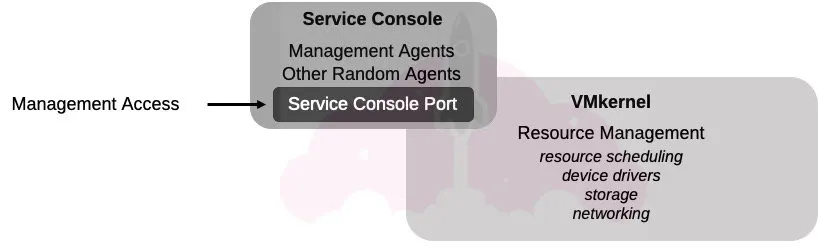

Think of the service console as a small virtual machine that ran next to your guest VMs and provided management access to the ESX host. The service console allowed you to login to ESX and issue esxcfg commands at a command line to configure your host.

Here is a very simple depiction of what it looked like:

Besides accessing the command line, you could download and install almost any agent you wanted into the ESX service console, like agents for hardware monitoring, backup, or, well, anything you wanted really.

The ESX management agents also lived here in the service console.

The service console talked to the VMkernel, which is basically the brains of your ESX or ESXi host.

Before we talk more about the VMkernel, let’s take a look at what changed with ESXi.

What is ESXi? (ESX integrated)

Wondering what ESXi is? It is the next generation of VMware’s ESX hypervisor. ESXi stands for ESX integrated.

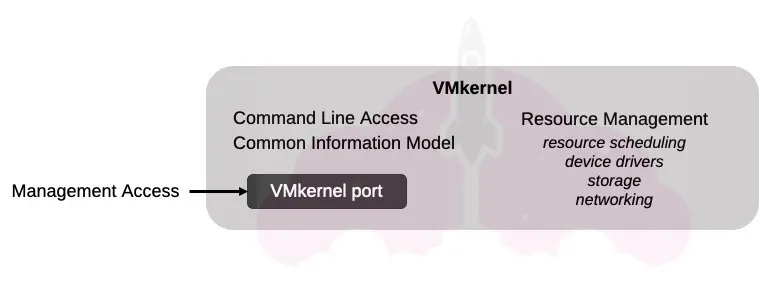

When ESXi was created, VMware integrated the service console functionality into the VMkernel, like this: Again, this is a very simple diagram but you can see what the major changes were, the biggest which is eliminating the service console completely from the ESXi architecture.

Again, this is a very simple diagram but you can see what the major changes were, the biggest which is eliminating the service console completely from the ESXi architecture.

As much as I resisted this change at the time, it made sense for a number of reasons. Remember, vSphere 4 came out in 2009, at the height of the virtualization boom. Everyone was virtualizing everything in sight and running their mission-critical workloads on ESX.

Here are a couple of reasons that VMware may have seen fit to make this change.

Performance and Stability for VMware ESXi

The service console could use only up to 800 MB of RAM, which, believe it or not, could be significant on some hosts of the 2009 era. Beyond that memory overhead, it could still wreak havoc with performance and stability.

Remember those third-party agents we talked about? Well, in this case, a bad agent could bring your ESX host to a screeching halt, which was not a good thing.

VMware’s Hypervisor Security

The reason it was so easy to develop and install agents on the service console was that the service console was basically a Linux VM sitting on your ESX host with access to the VMkernel.

This meant the service console had to be patched just like any other Linux OS, and it was susceptible to the same vulnerabilities as any other Linux server.

See a problem with that and running mission critical workloads? Absolutely.

By getting rid of this “management VM”, VMware was able to greatly reduce the attack surface of their hypervisor, which was becoming increasingly important as adoption grew so rapidly.

Simplification of Virtualization Management

By integrating these management functions into the VMkernel, the ESXi architecture became much simpler than ESX. As anyone who has ever architected anything can tell you, the simpler the better.

For example, instead of requiring a third-party agent for hardware monitoring, VMware adopted the Common Information Model (CIM), an industry standard from the DMTF. This made hardware data easy to see in vCenter Server, and let common hardware management platforms access it through a standard interface instead of a custom agent.

For a great overview of the CIM, be sure to check out this blog on VMware’s site. It is from 2011, and wonderfully explains this huge shift in ESX vs ESXi architecture.

Not only did this simplify management, but it also added to the stability and security of ESXi as a whole.

What is the VMkernel?

We’ve talked a lot about the VMkernel, which is the brains of ESXi.

I want to give you a simple overview so you can really begin to understand its capabilities.

Like I said, the VMkernel is the brains of the operation. It handles things like resource scheduling and resource management.

The networking and storage stacks also live in the VMkernel, and the ESXi host’s device drivers are handled by the VMkernel as well.

What are VMkernel Ports?

Back in the ESX days, we connected to our hosts with a special service console port. This was configured during the ESX installation process, so we could connect to our hosts to finish configuring and managing them.

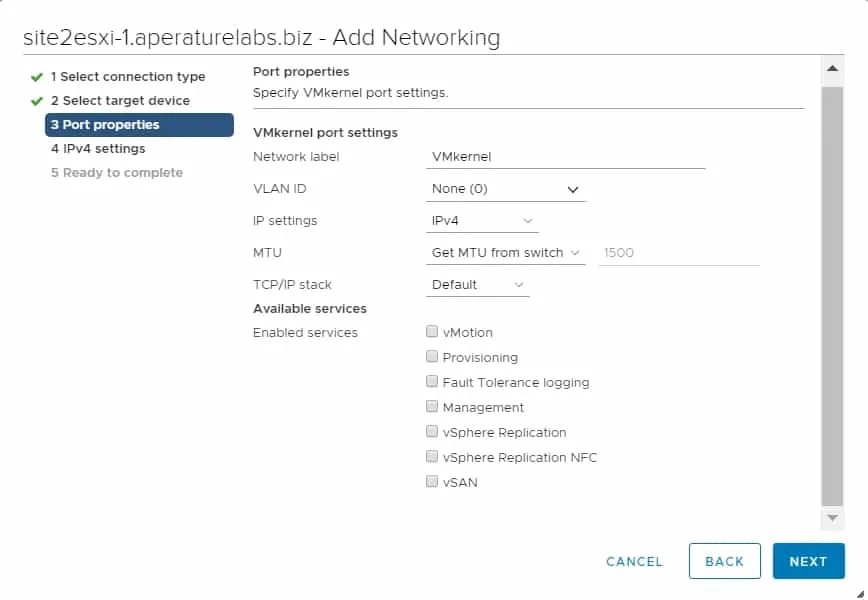

Starting with ESXi, we configured a VMkernel port for management. Today, VMkernel ports serve many more purposes than just management, as the vSphere product has advanced tremendously.

When ESXi first debuted, VMkernel ports were configured for Management, vMotion, and IP-based storage if you were using it. If you are configuring a VMkernel port for IP storage, you don’t tell vSphere it is for a special purpose the way you do for Management or vMotion. That’s because storage traffic simply goes over the default TCP/IP stack in vSphere.

VMkernel ports of course existed before ESXi, but unless you were using IP storage or vMotion (which, believe it or not, were not commonly used in the early days), you had probably never configured a VMkernel port before.

Today, as you can see, there are many more applications for VMkernel ports. You can read more about them in the VMkernel Networking Guide from VMware.

ESX vs ESXi Isn’t Even A Comparison

When ESXi first came out, many vSphere administrators, myself included, were obsessed with comparing ESX vs ESXi. We were just so used to the service console and our vSphere operations around it that it was a huge paradigm shift.

I specifically remember using a tool in the service console to manage my large ESX environment. Back before ESXi, tools like PowerCLI weren’t widely used because there was no reason to use them: you just used the esxcfg commands in the service console.

The VMware Infrastructure Toolkit (for Windows), or VI Toolkit, was the predecessor to PowerCLI, and it wasn’t even released until July 2008. In hindsight, you could see VMware setting the stage for the future of its platform by assuring performance, securing the codebase, and streamlining manageability.

Let’s face it, ESX as we knew it wasn’t going to support the continued upward trajectory of vSphere. VMware had to take steps to future-proof its product for what was to come.

In vSphere 4, VMware offered both ESX and ESXi to allow customers to make the shift gradually, but when vSphere 5 came out in 2011, ESXi became THE VMware vSphere hypervisor.

It was around this time that we also saw tools like Auto Deploy emerge, and PowerCLI begin to become supercharged.

We also saw more and more features come to both ESXi and vCenter. If your first version of vSphere was 6 or higher and you compared ESX 4.1, the last version of ESX full form, to ESXi 6.7, you would probably just laugh at ESX.

While ESX was clearly cutting edge when it first came out, the architectural changes from ESX to ESXi set the stage for vSphere’s continued advancement and growth. Without this shift to ESXi, we simply wouldn’t be where we are today.

Melissa is an Independent Technology Analyst & Content Creator, focused on IT infrastructure and information security. She is a VMware Certified Design Expert (VCDX-236) and has spent her career focused on the full IT infrastructure stack.